Ingram lets publishers opt out of sales to AI companies. Too bad that won’t work.

Can you block AI companies from training models on your books? Probably not. Even if Ingram pretends to help you.

How AI companies train models on books

AI companies love books. They’re high-quality content, perfect for model training.

The simplest way for an AI company to get illicit access to books is to use ebooks whose encryption has been broken. That's what Anthropic did (as, apparently, have many other AI companies), and it's why Anthropic settled a lawsuit for thievery. These companies simply get access to a large "corpus" of jailbroken ebooks.

Since that's pretty clearly a copyright violation, AI companies are turning to other means. They can buy physical books, dismantle them, and scan them. While this sounds cumbersome, there are efficient ways to do it (it's how Google Books was created, for example). And at this point, there's no definitive ruling on whether it's legal. At least one judge has ruled that such use is "transformative," and therefore legal.

The well-established "first-sale doctrine" says that once someone buys a physical copy of a creative work, such as a book, they can do pretty much whatever they want with it. They can sell it. They can burn it. They can take it apart and turn it into a collage. They can't scan it and post it online, but the first-sale doctrine could be interpreted to allow them to scan it for their own personal use.

Now Ingram will allow you to block the sale

Ingram, the largest book distributor, will now allow a publisher to opt out of sales to AI companies. The reasoning goes: if a publisher doesn't want its books used to train AI, the distributor will simply not sell them to companies like OpenAI, Anthropic, Google, or Microsoft. (Distributors don't generally sell directly to anyone other than bookstores or warehouses anyway, but now they'll promise not to sell to AI companies.)

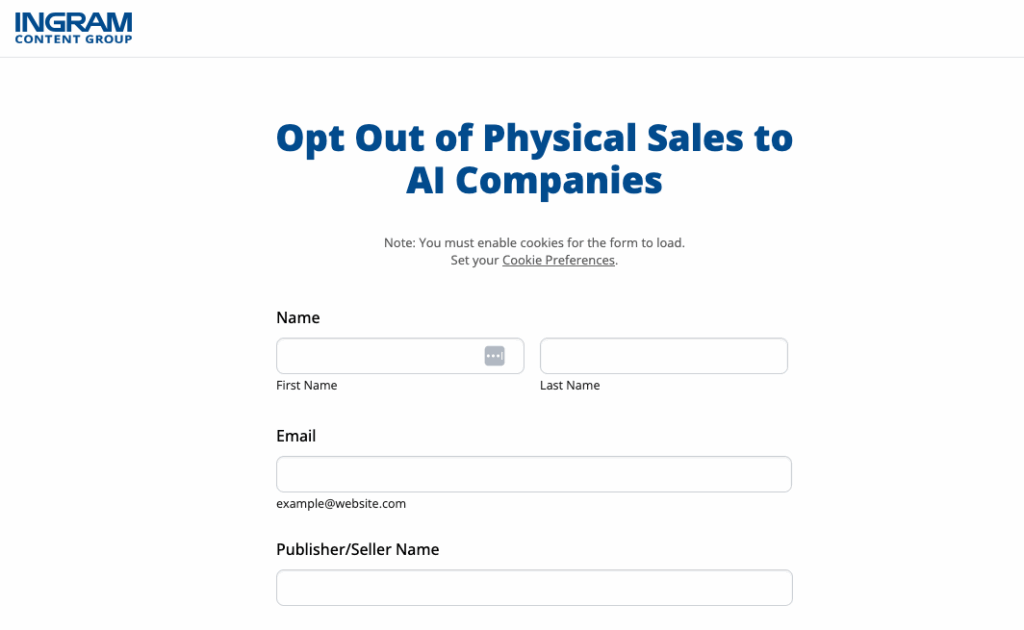

Any publisher who wants to opt out of such sales can do so with this form. Only publishers can opt out, not authors. (Ingram has no relationships with authors, only publishers.)

Here’s a portion of Ingram’s letter to publishers:

[W]e recognize that some publishers/sellers may prefer to restrict the sale of their products for AI training.

At Ingram, we are committed to transparency and to honoring our clients’ preferences. While we may not know the purpose of a given transaction, we will make reasonable efforts to honor your wishes. If you would like to opt out of Ingram knowingly selling your products directly to AI companies for model training purposes, please complete this form.

Please note that opting out will exclude your entire catalog from sales to AI Companies, and your opt out action will govern all future sales to AI Companies. If you have any questions or would like to discuss this further, please email your Ingram contact.

Why this is pointless

Ingram’s exercise is futile. It won’t work.

Here are just a few of the countless loopholes in this scheme.

- Imagine that I start a company called “Scan Corp”. Scan Corp buys copies of books and scans them, then shares the scans with an AI company for training purposes. There could be hundreds of scanning services like this. There is no way for Ingram to identify and block all of them.

- Suppose that the publisher Penguin Random House uses this form to opt out of sales to AI companies. A bookstore called "Shady Books" buys copies of Penguin Random House books like Atomic Habits through its distribution arrangement with Ingram. Shady Books then sells the books to Google, or to a Google employee. Ingram can't tell Shady Books whom to sell to, and has no way to stop this. (Is a bookstore really going to vet its customers? Bookstores don't behave this way.)

- In fact, anyone could be a straw buyer for AI companies. Ingram has no way to know that you're buying books only to resell them to OpenAI or Anthropic.

- AI companies can buy secondhand books at a 90% discount from used book distributors. Ingram has no say in such transactions.

Ingram's opt-out is performative. It makes Ingram seem as if it cares. It allows publishers to appear to take action. I suppose it has symbolic value. But its practical impact will be nil.