The big AI ripoff

Books are higher quality writing than random crap on the Internet. So it’s not surprising that AI developers find books to be an attractive resource for training tools like ChatGPT and Meta’s LLaMa.

Of course, the problem is that most recent books are copyrighted, and therefore it’s not easy to find their text online, at least in places that are legal and accessible.

But as Alex Reisner reported in The Atlantic, AI developers have solved that problem. There is a dataset called “Books3” that contains over 170,000 books; according to Reisner, it’s been used to train Meta’s AI tool.

If you’re wondering whether your own book is in Books3, you can now find out. Reisner has made Books3 searchable by author name at The Atlantic’s website here (gift link).

Two of my books are in AI training sets

Wondering what’s in there? I searched for books I’ve been involved with and people I’ve worked with. Two of my good pal Phil Simon’s books are in there: Analytics: The Agile Way and Too Big To Ignore: The Business Case for Big Data. There is a slew of books by the late, great, prolific Isaac Asimov:

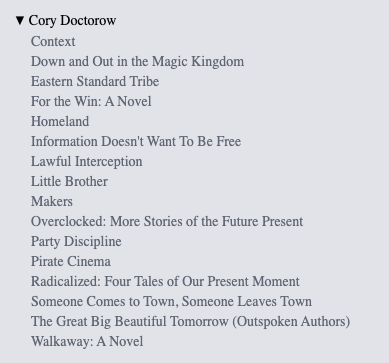

It’s unlikely that Cory Doctorow, author of “Information Doesn’t Want To Be Free,” is going to take being ripped off by big tech sitting down.

Malcolm Gladwell isn’t known for his laissez-faire attitude about his prose either.

I’m nowhere near as famous as those guys, but a couple of my books are in there, too.

Should I be upset?

I’d written that the legal case against AI tools that scrape the web is weak. But when I wrote that, I didn’t realize that these books weren’t just lying around on pirate sites; they were collected into a huge, neat bundle of pirated material, accessed with deliberate foreknowledge of the copyright violation. That seems like a much easier case to make: “Fair use” can’t stretch to encompass bundling 170,000 copyrighted books for easy ingestion to train a model.

But let’s put aside the legal question for a moment. There is a moral question here for authors: are you okay with this? Some authors, most notably Jeff Jarvis, have said that if training AI on their books improves it, they’re fine with it.

I share Jeff’s perspective on this: I too don’t want to be left out. If ChatGPT or another model can read Writing Without Bullshit and then write less bullshit — not guaranteed, of course, but we can hope — that might be a good thing.

But the problem I have here is with permission.

I didn’t get asked if my books could be scraped and dumped into Books3. Nor was I asked if those books could be used to train the model.

Tell you what. Pay me (and all the rest of the authors) a buck a book and I’ll let you use it for training purposes.

Regrettably, there’s no efficient method to license a book in this way. Rather than create one, the builders of AI tools just ripped us off without asking.

“Move fast and break things,” right? Where would Airbnb, Uber, or Tesla be if they’d carefully followed rules and regulations?

But it feels different when I’m the one being ripped off. So I’m afraid I can’t be as charitable as Jeff Jarvis here. If you build a deliberate book copyright ripoff machine, you’ve violated an ethical boundary.

This may slow the development of AI, but ultimately, I think this level of mischief is not going to be legally defensible.

Thanks for this post, Josh.

The legal issue is significant. I believe that more companies will follow Walmart’s lead and build their own mousetraps with proprietary data and tech.

I suspect that lawyers who focus on the intersection of artificial intelligence and intellectual property will be busy for decades.

Three of my books are there as well. I’m surprised because two of those were published more than a decade ago.

How did they get there?

Would someone ever buy a copy of a book and scan it? Would that make authors feel better?

The Books3 people downloaded ebooks and used software to break the encryption.

Easy DMCA case then, if y’all can prove that! Copyright in the US is crazy, but if it’s going to be like this, might as well use it.