Beyond AI prejudices; Forbes contributors ejected; library grants restored: Newsletter 10 December 2025

Newsletter 128. How thinking proponents and opponents of AI can come together. Plus, AI author scams catalogued, bookseller IPOs, three people to follow and three books to read.

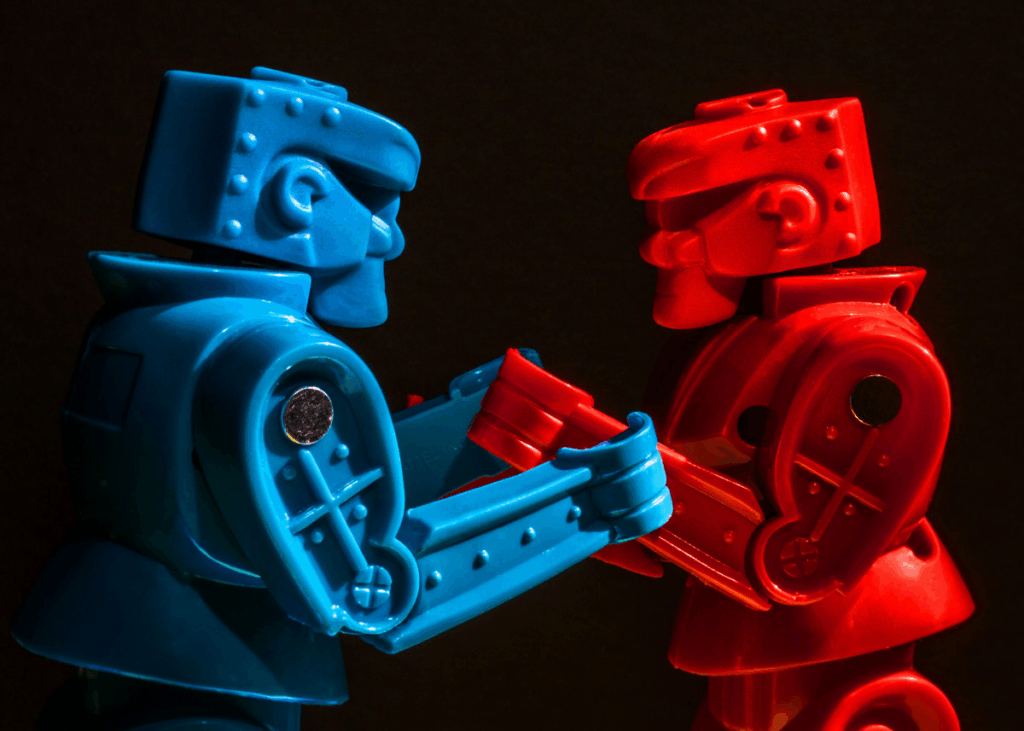

Creating peace between the AI factions

If one result stood out from my recent study of AI and the Writing Profession (sponsored by Gotham Ghostwriters), it was the pervasive hostility that now exists between writers who are AI proponents and those who object to it. It’s a uniquely divisive issue.

When other technologies emerged — PCs, spreadsheets, email, ecommerce, social media, smartphones — there were always early adopters and tech objectors. But I don’t recall email nonusers expressing disdain for email users, or smartphone holdouts calling iPhone buyers psychopaths. Sure, there was a certain low-level annoyance visible between the adopters and the refuseniks, but not hatred.

But as we riffled through the open-ended comments on the survey, we were treated to sentiments like these from the objectors:

It is removing human thought from the writing process. I can spot AI writing and even AI-outlined writing from a mile away because it is voiceless, empty, derivative slop. Of all the ways to rapidly accelerate climate catastrophe, this one has to be the stupidest.

Is “doom loop of computers writing regurgitated soulless garbage only to be read/summarized by other computers while humanity gets dumber and dumber and loses its ability to read, write, think and care” overly pessimistic?

I reckon it’s going to totally fuck [the writing profession]. The romance and art will be sucked out of it. Humanity will take one giant leap towards becoming a vanilla, soulless corporate fucksicle, bereft of soul and heart.

Meanwhile, the AI users wax poetic about their productivity:

Today alone I edited 5 chapters, made 3 book covers, summarized meeting notes, put together a Jira workflow, and still had time to go out for dinner with my family.

Human paced [writing] is a book a year and I have ideas for 60 books at least. I want to explore all these worlds in my head and share them before I die.

[I use it as my] emotional support robot [to] encourage me to keep going.

I know people in both camps. And there is room for a meeting of the minds — but it’s going to take conscious effort. And until that effort happens, all this anger is going to generate far more heat than light.

The first step is to stop caricaturing each other.

Based on our survey, only 7% of writing professionals use AI to generate text that will be published (and only 1% do that daily). The most common uses are mundane tasks like searching, using AI as a thesaurus, suggesting titles, and transcribing audio. People have always used technology to replace mundane tasks like spell checking and footnotes. Sophisticated AI users have figured out how to load up all their notes on a project and then query it. Characterizing writers who use AI as purveyors of boring and tedious slop is like any other prejudice: a shorthand way to tar a whole cohort of people for the actions of the worst among them.

The same bias also goes the other way. Despite the colorful objections in the survey (we were querying a group with excellent command of the language, after all), most of the objections were reasonable. People worry about unchecked hallucinations, which are a real problem. They also worry about environmental damage, a topic that deserves further study with current research illuminating both sides. And the problem of people, especially students, outsourcing their reasoning to a machine and losing the habit of critical thinking is one we should all be concerned about. Just as the AI users mostly aren’t publishing random AI-generated crap, the AI objectors aren’t storming the data centers with pitchforks.

What we need are ground rules and dialogue.

By ground rules, I don’t mean that there should be laws covering AI; the technology moves too fast for that. I mean that in any context, there has to be some dialogue and some conventions about what’s allowed and what’s not.

I’ve already published a checklist for book collaborators, like ghostwriters and authors. In a media organization, there must be stringent rules for verifying what’s published and what’s allowed for AI-generated graphics. In a corporate setting, workers who write need guidelines on what uses are prohibited (for example, I’d prohibit publishing raw AI output in corporate documents) and what responsibilities writers have for verifying the truth of what they share. Wiley’s recently published AI rules are a step in the right direction; the Association of American Publishers needs a standing committee suggesting common rules for the whole industry.

As far as I can tell, the major purveyors of AI tools (OpenAI, Microsoft, Google, Meta, X, and Apple) have invested 90% of their efforts in technology competition, 10% in lobbying, and approximately zero in helping users adopt AI principles. Microsoft’s Responsible AI Standard hasn’t been updated since 2022, which is basically the 19th century given the speed of change here. Google’s most easily located AI principles are about how it behaves, not how we should behave. It’s pretty typical for tech companies to unleash their cool technologies on the world and let the market figure out how to use them, but this is not the time for a free-for-all. We need thoughtful guidance.

When my sponsors and I undertook our research on AI and writing, we started from as neutral a position as possible, in the hopes of finding the most honest set of truths about AI attitudes unpolluted by prior bias. Anyone hoping to create harmony and progress in the workplace or the marketplace needs the same attitude.

I’m a lot more worried about how we treat each other on this topic than I am about Skynet achieving consciousness and taking over. In the end, we’re all trying to get useful work done with the best tools available and managing the problems those tools create. The more we can honestly understand what our fellow human beings are doing, what they’ve accomplished, and what they’ve learned, the more progress we can make and the more easily we can manage the side effects.

That’s going to take work. I’m ready to contribute. How about you?

News for writers and others who think

Electric Lit catalogues the absolutely convincing and totally disgusting AI-generated scams now flooding authors’ inboxes.

Randy Rainbow satirizes our new fact-free government health recommendations to the tune of “Cabaret.” Funny. Until your baby gets hepatitis, that is.

As the result of a lawsuit, the federal Institute of Museum and Library Services restored grants that had previously all been cut by the Trump administration.

Forbes keeps cutting more of its bullshit “contributors.” It’s basically an unmoderated collection of self-promoting bloggers. I wonder what the ones who got ejected did wrong?

James Daunt, who has a management role in both the UK’s Waterstones and Barnes & Noble, told the BBC that a public offering of the bookstore chains is almost inevitable.

Half the novelists in the UK think that AI will replace them, according to The Guardian.

Three people to follow

Eric Koester, founder and CEO of manuscripts.com, an innovative hybrid/book coaching venture

Derek Newton, founder of VerifyMyWriting.com, a highly accurate service for detecting AI-generated content

Stefanie Manning, Publisher and Managing Editor of Maine Trust for Local News, protecting Maine’s newspapers from the clutches of private equity

Three books to read

The Unexpected Power of Boundaries: Rethinking the Rules, Risks, and Real Drivers of Innovation by Sheri Jacobs, FASAE, CAE, AAiP (Amplify, 2026). How boundaries unlock creativity.

Life on a Little-Known Planet: Dispatches from a Changing World by Elizabeth Kolbert (Crown, 2025). A chronicle of our environment and how it’s fading away.

Cat on the Road to Findout by Yusuf/Cat Stevens (Genesis, 2025). The autobiography of Cat Stevens, who became Yusuf Islam.