You and your collaborators need to agree on AI guidelines. Here’s a checklist.

Authors I work with have used AI tools in dozens of different ways. There are no fixed rules — at least not yet.

But it’s been my experience that close collaborators — such as coauthors; an author and ghostwriter; or an author and a developmental editor — need to agree on what uses of AI are allowed and what uses are prohibited.

The overarching rule is simple: your close collaborator should never be surprised that you used AI. If you’ll forgive a crude metaphor, you wouldn’t bring a sex toy into the bedroom unless you and your partner had discussed it ahead of time. Similarly, I don’t think AI robots belong in the intimate intellectual relationship between coauthors unless both parties have agreed on what’s appropriate.

I’ve experienced these sorts of surprises a lot lately. They tend to puncture the trust that close collaborators need to work together.

Agreement on these issues is harder than it looks. For example, I’m working with a technically sophisticated author who frequently uses AI for all sorts of purposes. He had his lawyer draft an agreement for our work together that was full of entirely reasonable clauses, except for one that said I was prohibited from using AI! The lawyer, unaware of the details of our working relationship, had included a standard clause intended to ensure that I didn’t pass off AI output, which can’t be copyrighted, as work intended for publication. We fixed it by prohibiting only the AI uses that would cause problems. Misunderstandings like this are common precisely because there are no rules for this stuff yet.

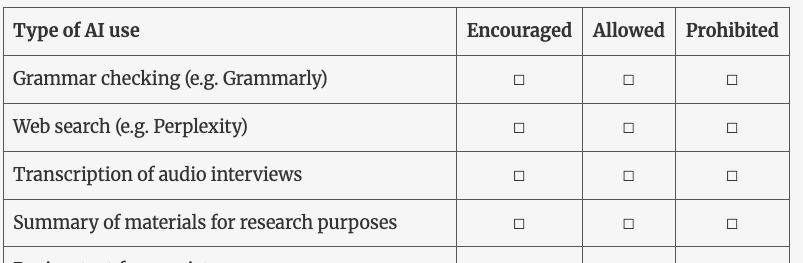

Use this checklist to ensure you don’t surprise your collaborators

Here’s a list of ways that people use AI. You and your coauthor, editor, or ghostwriter should review it and check off the ones you think you might use, as well as the ones that should be prohibited. My feeling is that this ought to be symmetrical: the same set of tools and use cases should be allowed or prohibited for both you and your collaborator. (It makes no sense, for example, if one of you can do AI-supported research and the other can’t.) Check one box in each row.

| Type of AI use | Encouraged | Allowed | Prohibited |
|---|---|---|---|
| Grammar checking (e.g. Grammarly) | ☐ | ☐ | ☐ |
| Web search (e.g. Perplexity) | ☐ | ☐ | ☐ |
| Transcription of audio interviews | ☐ | ☐ | ☐ |
| Summary of materials for research purposes | ☐ | ☐ | ☐ |
| Review text for consistency | ☐ | ☐ | ☐ |
| Generate first drafts of text content | ☐ | ☐ | ☐ |
| Generate outlines or summaries | ☐ | ☐ | ☐ |
| Suggest words or appropriate terminology | ☐ | ☐ | ☐ |
| Deep research reports | ☐ | ☐ | ☐ |
| Assess reading level | ☐ | ☐ | ☐ |
| Generate graphics | ☐ | ☐ | ☐ |
| Clean up formatting or citations | ☐ | ☐ | ☐ |
| Generate text intended for publication | ☐ | ☐ | ☐ |
| Verify AI content accuracy | ☐ | ☐ | ☐ |
| Upload project content to AI projects or repositories (ensuring that the content is not used for AI training) | ☐ | ☐ | ☐ |
| Upload content without safeguards | ☐ | ☐ | ☐ |
| Create a shared AI project for collaboration | ☐ | ☐ | ☐ |

Some of these answers seem obvious to me. Using AI for research is quite common and, given the AI summaries at the top of Google searches, hard to rule out. On the other hand, it’s always a bad idea to upload content without safeguards or to use AI to generate (uncopyrightable) text intended for inclusion in published content. But many of the other use cases will seem fine to one collaborator and unacceptable to another.

How to use this checklist

Each collaborator should fill out the checklist, and then you should compare. Where there are differences, it’s worth discussing why you disagree and coming to some sort of meeting of the minds. The discussion will be most productive if you can explain why you expect one use case or another to be helpful.

If you plan on using a technique that’s not on this list, that’s worth noting and discussing as well.

You might decide to just include the agreed list in a shared document. But if you have a legal collaboration agreement, which is common, especially when there’s a commercial relationship, it’s worth including the agreed-upon checklist in the legal document.

Given how AI capabilities are changing, and how writers’ skills are evolving, it’s also worth coming back to this throughout the project. It’s not only possible but likely that you’ll discover new uses and need to agree on whether they’re appropriate for your project.

One more thing: Publishing agreements these days often include clauses that prohibit AI use. As is typical with publishers, these agreements often miss subtleties; for example, in the interests of prohibiting AI-generated text for copyright reasons, they may appear to prohibit AI brainstorming as well. If you’ve filled out the checklist, you’ll know what exceptions to negotiate in the publishing agreement.

I’m curious. Has anyone else had misunderstandings about this with collaborators, as I have multiple times? Are there use cases I missed that ought to be included in this checklist? And have you had challenges dealing with AI in your publishing contracts?

I look forward to learning from your experience.

I’d add a column for “discouraged” between “allowed” and “prohibited.” The AI assistant attached to Microsoft Teams did an impressive job transcribing and summarizing a couple of recent meetings, so I’m not opposed to every use of it. But I can’t in good conscience accept uncited sources, untested claims, or unedited prose.

Does user intent figure into any of this? I mean intent such as this: I use an AI option with the intent of swiftly revealing inconsistencies or repetitions. My intent is to reliably reveal such issues, potentially cutting time spent and thus saving my author collaborator money.

Conversely, the author uses an AI option to combine several ideas into a particular point or concept, or to produce a wholly new point or concept that the author didn’t originate. The intent is to produce writing beyond the author’s current skill level or creativity.

Over the past 10 months or so, I have encountered endless AI uses by author collaborators, who seem to hold multiple, and even conflicting, ideas about what’s okay and what isn’t. The general thinking I’ve observed is that until someone says “no,” it’s okay to muddle original thinking and writing with whatever AI will absorb, agitate, and urp out.