Wiley’s AI Guidelines: A solid step in the right direction

Publishers and authors are panicking about the appropriate uses of AI. It’s a nuanced problem, because AI use creates issues of quality, copyrightability, and disclosure. Wiley, publisher of vast numbers of business books, has now published its extensive guidelines online for all to see.

Wiley’s guidelines are a great start

Given some of the shortsighted takes I’ve seen about AI and writing from supposed experts — and some obviously misguided and impractical clauses in publishing contracts — my expectations were low. I was pleasantly surprised to see that Wiley’s guidelines are balanced and practical. They’re also 6,300 words long, because there’s a lot to cover. They’ve covered nearly all of it in an appropriate level of detail. The advice is strategic rather than being highly specific, which is the only possible approach in a field that is developing this rapidly.

Partly because of the rapid development of the tools, many of the guidelines are too vague to be actionable. There’s clearly more work to do here, but some of that will have to wait until the tools themselves become more mature.

Wiley’s approach reflects the right attitude

Here’s the general guideline at the top of Wiley’s guidance:

Authors may only use AI Technology as a companion to their writing process, not a replacement. As always, authors must take full responsibility for the accuracy of all content and verify that all claims, citations, and analyses align with their expertise and research. Before including AI-generated content in their Material, authors must carefully review it to ensure the final work reflects their expertise, voice, and originality while adhering to Wiley’s ethical and editorial standards.

This is perhaps the most important instruction. Simply put: AI does not absolve you of the responsibility to ensure accurate content and give appropriate credit to the work of others. It’s a tool, not an excuse. Given AI’s tendency to hallucinate content to please its users, this is an essential guideline. You should have it tattooed on the backs of your hands for viewing as you type.

Regarding disclosure, Wiley’s general statement is also on the right track:

Authors should maintain documentation of all AI Technology used, including its purpose, whether it impacted key arguments or conclusions, and how they personally reviewed and verified AI-generated content. Authors must also disclose the use of AI Technologies when submitting their Material to Wiley. If not provided, your Wiley contact may request this documentation. Transparency is essential to Wiley’s commitment to ethical publishing and integrity.

If you use AI, you should be clear about how you use it and prepare to include a statement to that effect in what you write. Wiley’s guidelines include tips on how to document your AI use. I suspect most writers will find them onerous, and then later, when they need to recall what they did, regret that they didn’t keep better records.

The “terms and conditions” conundrum

Wiley includes this guideline near the top of their document:

Authors should carefully review the terms and conditions, terms of use, or any other conditions or licenses associated with their chosen AI Technology. Authors must confirm that the AI Technology does not claim ownership of their content or impose limitations on its use, as this could interfere with the author’s rights or Wiley’s rights, including Wiley’s ability to publish the Material. Authors should periodically revisit the AI Technology terms to ensure continued compliance.

Regrettably, this instruction is both essential and impractical. No one has the patience to carefully review all those terms and conditions. They’re generally written in opaque legal language. Unfortunately, it’s certainly possible that an AI tool’s terms and conditions could interfere with the author’s rights as Wiley warns, for example by allowing content to be shared with others for training purposes or by asserting rights to it. And “Authors should periodically revisit the AI Technology terms to ensure continued compliance” is impossibly vague.

The major tools that writers use, including ChatGPT, Grammarly, Google Gemini, and Microsoft Copilot, have generally behaved in reasonable ways regarding rights and training, because avid tech-watchers would likely note departures from such behavior and raise a stink. They also tend to include a setting that allows you to prohibit them from using your content for training purposes. It’s certainly worth tracking this issue. Unfortunately, there’s no good answer to the question “How far can you trust big tech companies?”

If you use a less common tool, I’d certainly take Wiley’s advice and read the terms carefully. If it seems like a miraculous solution to some problem you have, you may also discover that it’s hoovering up rights to your content in ways that you and your publisher would find objectionable.

What is “responsible use”?

Here’s another maddeningly vague instruction in Wiley’s guidelines:

Authors must use AI Technology in a manner that aligns with privacy, confidentiality, and compliance obligations. This includes respecting data protection laws, avoiding the use of AI to replicate unique styles or voices of others, and fact-checking AI-generated content for accuracy and neutrality. Authors should be aware of potential biases in AI outputs and take steps to mitigate stereotypes or misinformation. When inputting sensitive or unpublished content, authors should use tools with appropriate privacy controls to protect confidentiality.

An intelligent writer’s reaction to this is “Sounds great, but how the heck am I supposed to do this?” Good question.

Wiley is basically covering their butt with this instruction. If you use AI in a way that they later determine is inappropriate, illegal, or unethical, they’ll cite this and say you should have known better.

Best practices

Wiley’s guidelines include a number of very helpful best practices. These are instructions well worth following. Here are a few:

When using AI tools in your writing process, focus on ways they can enhance rather than replace your voice. . . . Use AI for specific tasks with clear objectives—this approach keeps you in control of the final work and allows for a systematic review and refinement of any AI-generated content.

In other words, be a writer using AI tools to help, not a person who just copies AI slop. I agree.

Useful ways to utilize AI tools and maintain your creative voice include:

- Analyzing and identifying patterns or themes across research materials

- Exploring different approaches to explaining complex topics

- Adapting examples to suit different audiences

- Polishing and reviewing your work

This is fine, but there is so much more that a writer can do. You can load up all your content and use AI to navigate the repository. You can set up agents to find useful material. The most important missing instruction here is: be iterative. The first thing an AI generates in response to your query is likely to be inadequate. This is not a sign that it’s useless. It is a clue that you need to refine your prompts to go deeper and fix the flaws that generate the inadequate responses.

This general instruction is a great way to get started:

Break your process into specific steps so you can identify areas where AI might be most useful. These could be smaller, low-risk tasks or tasks that you often procrastinate working on. Try testing out how AI tools respond to your needs to assist with these small and specific tasks. It is helpful to think of the AI tool as a junior assistant rather than a peer or expert and to prompt it as you would someone with less experience and knowledge of your needs.

Regarding research, this suggestion stops far short of what’s useful:

Use AI to summarize research papers, identify recurring themes in literature, or analyze trends across multiple sources. When using AI for literature search, always verify sources independently, as AI-generated references may be inaccurate or non-existent.

There’s a lot more that can go wrong here than non-existent sources. I find that the most likely type of hallucination in AI-powered research is the case where the AI tool says “Source X says Y,” when in actuality, Source X says something quite different from Y. Never trust an AI tool’s interpretation of a source. Get the link and read it yourself, starting with the abstract, which is far more likely to be accurate than the AI’s summary. AI (especially search tools like Perplexity) is great for identifying sources, but as a researcher you must verify what they say.

Wiley includes these specific instructions for verifying content, which I support:

- Identify all information requiring verification: specific statistics, technical terminology, methodological descriptions, citations, and any factual statements

- Verify each of the above elements against authoritative sources in your field before inclusion in your manuscript

- Consider having colleagues or peers review technical content in their areas of expertise

Wiley also suggests this very useful way to use AI as a writing helper:

Use AI to refine phrasing for clarity and conciseness, suggest alternative word choices, identify redundant content, or improve transitions between sections. For technical writing, AI can also help ensure terminology consistency. For authors writing in a non-native language, AI can assist with ensuring natural phrasing and aligning with the expected tone and style of academic or professional writing.

It’s an awesome thesaurus and a decent writing coach. Using it that way will improve your writing.

Warnings about copyrightability

AI output is not copyrightable. Regrettably, whoever wrote this didn’t understand what’s wrong with a dangling modifier, but the spirit is correct:

As a book author, it’s important to understand how copyright protects your work. . . . Because copyright protection generally requires human authorship, AI-generated content without substantial human modification may not qualify for copyright protection.

Their suggestions for how to do this aren’t bad:

- Make significant revisions to any AI-generated content, beyond basic editing, to reflect your unique expertise, your original, creative expression, analysis, insights and conclusions

- Adapt, modify, customize, and transform AI outputs to match your writing style and voice

- Integrate AI-generated elements thoughtfully into your larger work

- Make creative decisions about the selection and arrangement of the material in your work

- Ensure the final work clearly reflects your expertise and your original, human-authored, creative contributions and decisions

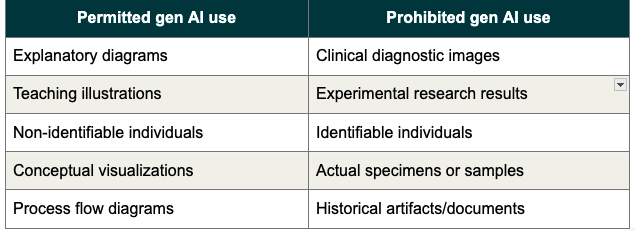

Wiley has specific and useful instructions on using AI to generate graphics

This is good advice:

AI-generated explanatory images must be accurate and not misrepresent information. You must verify that AI-assisted illustrations effectively communicate key concepts before they are used in Wiley publications. Review final images to ensure accuracy. Let your Wiley contact know if your images are final in your manuscript or are drafts to be recreated by a visual design professional.

I might add that AI-generated graphics often include incomprehensibly misspelled words, and you really need to check for that. And here’s Wiley’s helpful table about what’s permissible for AI-generated graphics and what’s not:

AI for auxiliary materials: a nice idea

Wiley suggests using AI for educational and marketing materials, for example:

- Practice questions and answer keys

- Examples or step-by-step solutions

- Instructor guides and lesson outlines

- Scenario-based learning activities

- Engagement strategies for interactive exercises

But keep in mind that any BS it invents may drive your students crazy, and they’ll rightly blame you.

Suggested disclosures

Don’t use AI and then hide what you did. Document it instead. Here’s what Wiley suggests:

When declaring AI use, clearly specify:

- The location of the AI-supported content

- The AI tools and versions used

- The purpose of the AI tool

- Your process for validating the information

- Whether the AI tool altered your thinking on key arguments or conclusions

That sounds good in principle. But anyone working on a book knows there are thousands of tasks involved. Documenting each specific use will rapidly get tedious. This might be impractical to track at the suggested level of detail.

Conclusion: Don’t limit yourself to Wiley’s guidelines

Wiley is soon going to find out that its November 2025 guidelines have become out of date. They need to be maintained, and I hope Wiley has the stamina to do so.

There is no reason that this set of guidelines should vary from publisher to publisher. I hope that these guidelines become a starting point for cross-publisher standards. It’s certainly going to be easier for authors if the guidelines are common across the industry, in the manner of a standardized style guide such as the Chicago Manual of Style.

In the meantime, excellent writers will follow these general rules:

- Use AI to save time, not to substitute for your skill as a writer.

- Don’t trust AI output. Verify everything it tells you.

- Don’t ever publish raw AI output. It’s not just that such output can’t be copyrighted. It’s also likely to be boring or clichéd.

- Document your tool use. Don’t hide it. The only thing worse than reading AI-generated slop is finding out that the author tried to fool you into thinking it was their own work.

- Keep up on the state of the art. AI is changing. Keeping track of those changes — both the useful ones and the dangerous ones — is part of your job as a writer.