Beyond boring statistics

I’m digging into the data we’ve collected so far in our survey about AI and the Writing Profession, and I thought you might be interested in how survey analysis professionals like me work to generate insights from such data. (The survey is still open until September 4, if you’d like to add your own perspective.)

It’s all about the questions you ask.

The simplest, most straightforward questions yield boring answers. For example, this survey will be able to answer questions like:

- How many writers use AI to help with writing tasks?

- What tools are most popular?

- How concerned are they about problems like hallucinations and the environmental impact of AI?

The answer to each of those questions will be an incidence number: for example, x% of writers use AI. But that number will be both boring and of limited utility. It will be boring because, standing alone, it adds no perspective. And it will be of limited utility because its accuracy depends on how representative our sample is, which is very hard to measure.

Better questions combine insights and offer comparisons

Instead of stopping with simple incidence numbers, I’m looking into questions like these:

- Which types of writers use AI the most, and which the least?

- How many different tools are employed by a typical writer who uses AI?

- Do people who use AI for many different tasks differ from those who use it for only a few? If so, how do they differ? Are there skews by age, gender, or income, for example?

- How do the concerns about AI differ between those who use it frequently and those who use it sparingly, or not at all?

- Just how real is the phenomenon of writers’ loss of jobs or income due to AI, and what clues are there about how that trend might develop?
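
A comparison like the first question in that list comes down to a cross-tabulation: group respondents by writer type, then compute the share of each group that uses AI. Here is a minimal sketch in Python, using only the standard library. The field names (`writer_type`, `uses_ai`) and the handful of rows are invented for illustration; they are not the survey’s actual schema or results.

```python
from collections import defaultdict

# Hypothetical respondents, invented for illustration (not real survey data).
responses = [
    {"writer_type": "technical writer", "uses_ai": True},
    {"writer_type": "technical writer", "uses_ai": True},
    {"writer_type": "technical writer", "uses_ai": False},
    {"writer_type": "copywriter", "uses_ai": True},
    {"writer_type": "copywriter", "uses_ai": True},
    {"writer_type": "copywriter", "uses_ai": True},
    {"writer_type": "copywriter", "uses_ai": False},
    {"writer_type": "speechwriter", "uses_ai": False},
    {"writer_type": "speechwriter", "uses_ai": True},
]


def incidence_by_group(rows, group_key, flag_key):
    """Return {group: (share_with_flag, group_size)} for each group."""
    counts = defaultdict(lambda: [0, 0])  # group -> [flagged, total]
    for row in rows:
        counts[row[group_key]][1] += 1
        if row[flag_key]:
            counts[row[group_key]][0] += 1
    return {g: (flagged / total, total) for g, (flagged, total) in counts.items()}


by_type = incidence_by_group(responses, "writer_type", "uses_ai")

# List groups from highest to lowest AI use, with the group size beside each share.
for group, (share, n) in sorted(by_type.items(), key=lambda kv: kv[1][0], reverse=True):
    print(f"{group:18s} {share:6.1%}  (n={n})")
```

Printing the group size next to each percentage is deliberate: a share based on two or three respondents deserves far less weight than one based on hundreds.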

Here are a few things to note about these questions.

- To even be in the position to ask these questions, you need to plan for them. That means designing the survey carefully with such questions in mind.

- Comparisons can help alleviate biases. For example, it’s possible that our survey, just by virtue of its name, attracted a disproportionate number of both those who embrace AI and those who hate it. But having collected a lot of data from both groups, I can compare them. Those comparisons are more likely to generate dependable insights than a raw number like “x% of writers are using AI.”

- Because some of these questions require looking at smaller slices of the data (for example, comparing technical writers to copywriters), they depend on a larger overall sample, so that the slices still have enough respondents to be interesting. You can compare slices of a 1000-person survey, but the slices of a 100-person survey are usually too small to support statistically meaningful comparisons.

- It helps to be in a position to define new classifications from existing answers. For example, to define “heavy AI user,” I’m looking at the frequency of use as well as the number of different tasks that the respondent uses AI for.

- Comparisons illuminate in ways that raw statistics don’t. I can answer questions like “How much higher are the incomes of heavy AI users than those of nonusers?” or “How much more likely is a heavy AI user than a nonuser to be concerned about AI tools trained on content without permission?” The result is not an incidence statistic, but a ratio.
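
The last three points can be sketched in a few lines of Python: deriving a new classification from existing answers, turning two incidence numbers into a ratio, and checking how slice size affects precision. Everything here is an assumption made for illustration. The field names, the thresholds that define a “heavy” user, and the rows themselves are invented, and the margin-of-error formula assumes a simple random sample, which an opt-in survey is not, so treat it as a rough guide only.

```python
import math

# Hypothetical respondents, invented for illustration (not real survey data).
# uses_per_week and ai_tasks stand in for frequency of use and number of tasks.
responses = [
    {"uses_per_week": 10, "ai_tasks": 5, "concerned": True},
    {"uses_per_week": 7, "ai_tasks": 4, "concerned": False},
    {"uses_per_week": 6, "ai_tasks": 3, "concerned": True},
    {"uses_per_week": 5, "ai_tasks": 6, "concerned": False},
    {"uses_per_week": 1, "ai_tasks": 1, "concerned": True},
    {"uses_per_week": 0, "ai_tasks": 0, "concerned": True},
    {"uses_per_week": 0, "ai_tasks": 0, "concerned": True},
    {"uses_per_week": 0, "ai_tasks": 0, "concerned": True},
    {"uses_per_week": 0, "ai_tasks": 0, "concerned": False},
]


def classify(row, min_uses=5, min_tasks=3):
    """Derive a usage segment from two existing answers (thresholds are assumptions)."""
    if row["uses_per_week"] == 0:
        return "nonuser"
    if row["uses_per_week"] >= min_uses and row["ai_tasks"] >= min_tasks:
        return "heavy"
    return "light"


def share(rows, segment, flag_key):
    """Fraction of respondents in a segment for whom flag_key is true."""
    members = [r for r in rows if classify(r) == segment]
    return sum(r[flag_key] for r in members) / len(members)


def margin_of_error(n, p=0.5, z=1.96):
    """Approximate 95% margin of error for a proportion from a simple random sample."""
    return z * math.sqrt(p * (1 - p) / n)


heavy_share = share(responses, "heavy", "concerned")
nonuser_share = share(responses, "nonuser", "concerned")
ratio = heavy_share / nonuser_share  # a comparison, not an incidence number

print(f"heavy users concerned: {heavy_share:.0%}")
print(f"nonusers concerned:    {nonuser_share:.0%}")
print(f"ratio (heavy / nonuser): {ratio:.2f}")

# Precision of a single slice at two different slice sizes.
for n in (100, 10):
    print(f"slice of {n:3d}: about +/- {margin_of_error(n):.0%}")
```

Under those assumptions, a slice of 100 respondents carries a margin of error of roughly 10 percentage points, while a slice of 10 carries roughly 31. That is why slicing a 1000-person survey ten ways can still be informative, and slicing a 100-person survey the same way mostly produces noise.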

The unanswered questions

Survey insights can only go so far. A survey like this doesn’t tell you the answers to questions like:

- Did AI use generate more pessimistic views on the writing profession? (Surveys are murky on questions of causation.)

- How will attitudes change in the future? (A survey is a snapshot of one moment in time. To answer trend questions, you need a series of surveys.)

- What is the right strategy for media organizations? (We can share facts, but strategy also demands insight into industries and how they interact, which surveys don’t provide.)

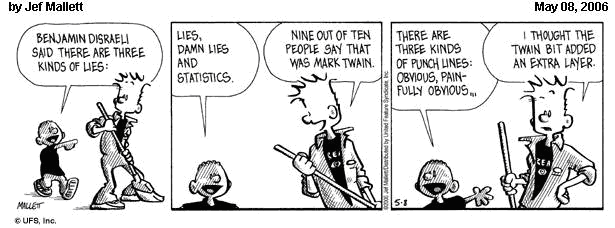

Even so, right now, a lot of people in publishing, media, advertising, and politics are operating on gut instinct about AI and where it’s going, with a heavy helping of anecdotal “data.” Gut instincts are often wrong. The singular form of the word “data” is bullshit. Actual data analysis will fuel more informed discussion.

A final reminder: you still have a chance to be part of this survey, right here. A bigger sample will allow my partners and me to draw better conclusions. We’d be especially interested in more perspectives from some underrepresented groups, including technical writers, PR professionals, and speechwriters.

I promise to share the insights broadly as soon as the analysis is complete. And having dived into the data, I can tell you that there are some pretty interesting patterns. I just need a little more data to make the results as insightful as possible.
