What is AI good for; The Onion swallows InfoWars; fake bear damage: Newsletter 20 November 2024

Newsletter 71. Why technology’s long-term impact is unknowable, HarperCollins pays you to train AI on your books, Robert F. Kennedy Jr.’s imaginary world, plus three people to follow and three books to read.

Everyone predicting the AI future is wrong

I spent last Friday at MIT, attending a conference called “BIG.AI@MIT” (Business Implications of Generative AI @ MIT), an all-day conference put on by Thinkers 50, Accenture, the MIT Initiative on the Digital Economy, and Wiley. It was an amazing collection of talent: academic leaders, business leaders, venture capitalists, and authors, including no fewer than two MacArthur grant recipients. Here’s a coherent summary of what they believe the future holds for AI:

Hard to say, but it sure is big!

Oh, there was plenty of pontificating and lots of wisdom and foresight, starting with the first panel, titled, without a trace of irony, “GenAI: Disruptive Innovation or Paradigm Shift.” (I kept score: paradigm shift won overwhelmingly.)

Tuck School of Business Professor Scott D. Anthony predicted AI would create “a shadow that is going to go over everything we see,” with entire industries upended: consulting, accounting, legal services, education, and ad agencies. He likened the impact of AI to the discovery of gunpowder: the era after AI would be qualitatively very different from what came before.

Columbia Business School Professor Rita McGrath suggested the advent of a “strategic inflection point” that creates a 10x shift in what’s possible (faster, cheaper, smarter).

H. James Wilson, global managing director of technology research and thought leadership for Accenture, suggested five principles for companies: focus on reimagining business processes around human/machine collaboration; experiment and scale up successes; embrace responsible leadership around making AI systems fair, safe, transparent, and explainable; get the digital core (data) ready; and commit to “skilling” your people to use the tools appropriate to the new world.

Wilson also pointed out that AI models were improving at breakneck speed, people and organizations were adapting more slowly, and AI policy and regulation were moving more slowly still, left in the dust by the pace of change.

In case that’s not profound enough for you, Sinan Aral, Director of the MIT Initiative on the Digital Economy, opined that “There is a fear that in losing our jobs to AI, we will lose our purpose as a species.”

In 40+ years in the tech-focused working world, I’ve seen multiple waves of disruptive innovation/paradigm shifts transform how we do everything, from PCs to the Internet to mobile (and a few that didn’t actually transform everything, like blockchain and virtual reality). The key insights can be summed up by Amara’s Law: “We tend to overestimate the effect of a technology in the short run and underestimate the effect in the long run.” If you’ll indulge me, I’d like to propose Bernoff’s corollaries to Amara’s Law:

- Even if you know Amara’s Law, there is a strong tendency to overestimate the speed of change, because the most extreme predictions get the most attention.

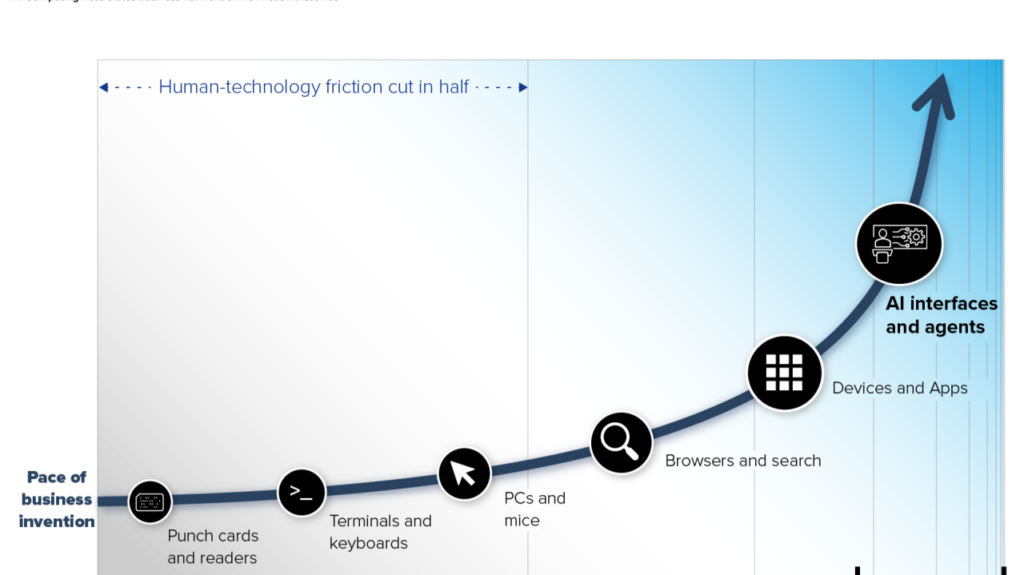

- Change happens only when organizations figure out how to empower people with the new technology. (Thanks to Ted Schadler of Forrester, whose graphic is shown above, for this insight.) This is extremely hard and takes many more years than advancing the technology does.

- The clumsy, difficult, and problematic early implementations of any technology make “skilling” mainstream people pretty difficult.

- In the long run, more of the money flows to the organizations that figure out how to help humans harness the technology, not to the creators of the technology itself. (Who made more money from PCs, Microsoft or Intel? Who made more money from the Internet, Google or AT&T?)

- The eventual change is profound. But it’s impossible to predict where it’s going when you’re at the very start of the shift.

Consider a few illustrative examples of Yogi Berra’s quip: “It’s tough to make predictions, especially about the future.”

The PC era effectively launched in 1977 with the Apple II. If you’d asked at that moment, “What are PCs for?”, the answer would have been “Hobbyists doing word processing.” It was inconceivable at the time that every professional worker would be using a portable computing device nearly all of the time, that they’d all be networked together, and that everything in business would run through those devices.

The internet was created in 1983 so that scientists could share data. Tim Berners-Lee created the web in 1989 for the same reason. Ecommerce began to emerge in the late 1990s, and the cloud effectively launched in 2002 with Amazon Web Services. No one in 1983, 1989, or even 2002 could have given a smart answer to the question “What are the internet and the web really for?”, because the answer was “To be woven into the fabric of all human activities.” (But if at the time you’d guessed “Sharing cat videos,” I would give you points for foresight.)

Mobile phones launched in 1979. If you’d asked what mobile phones were for in the 80s and 90s, the answer would have been “phone calls.” BlackBerry devices launched in 1999; after that, the obvious answer for what mobile devices were for was “email.” It took until at least 2007, with Apple’s launch of the iPhone, to reveal the actual impact of mobile computing: to connect most of the world’s people to all of the world’s information everywhere, all the time, and to generate an addictive experience that threatens to send everyone into a vortex of anxiety and depression.

The grey hairs on my head attest to the experience of all these waves of technology. So during the Q&A period after the first panel, I asked the panelists why they were optimistic about these predictions, given how challenging it has been to see the future accurately and how, time and again, our hopes that corporations would embrace technology thoughtfully and governments would regulate it wisely were shattered. The answers shaded into technological determinism. Basically, we need to get this right, so we will.

Right now AI appears to be the world’s biggest and most powerful hammer, and everything humans do looks like a nail. At the conference I saw startups using AI to design better drug molecules, assess how to make big buildings more energy efficient, and maximize profits with intelligent approaches to pricing. These are some pretty interesting nails. You can also use AI to rewrite the Tesla owner’s manual in the form of a sonnet and make videos of Joe Biden and Donald Trump riding off into the sunset together.

It’s going to be big. But right now we’re just hitting things with a really big, expensive hammer. Expect a lot of busted fingernails until we figure it out . . . and be very suspicious of anyone who claims to know what that looks like.

News for writers and others who think

MIT Technology Review announced the AI Hype Index, rating various AI technologies on two dimensions of hypiness. “Because while AI may be a lot of things, it’s never boring.”

The Onion bought conspiracy theorist Alex Jones’s InfoWars site out of bankruptcy. Irony is dead.

HarperCollins is offering to split a $5,000 bonus with authors willing to license their books for AI training. Here’s a skeptical take on that from the Authors Guild.

Four people apparently defrauded their car insurance company of $142,000 by claiming bears had damaged their cars, when the damage was actually caused by a person in a bear costume with fake metal claws. AP story (not The Onion, not InfoWars).

If you’re interested in the truth, you might enjoy reading about the version of it that Health & Human Services cabinet nominee Robert F. Kennedy, Jr. has historically embraced (Washington Post, gift link).

Nielsen, the TV ratings company, has historically been extremely slow to update its measurement methodology to address technology changes in TV viewing. Advertisers and TV networks put up with it, because there was no realistic alternative. But now, as streaming once again implodes the viewing world, Paramount says Nielsen’s ratings are overpriced by a factor of two. Will Nielsen survive this upheaval as it has every other challenge in the last 30 years?

Three people to follow

Dr. Marcia Layton Turner, ghostwriter and ghostwriting expert.

Lily Lyman, general partner at Underscore VC, super-smart East Coast venture capitalist.

Doc Searls, still a provocative thinker 25 years after The Cluetrain Manifesto.

Three books to read

Human + Machine: Reimagining Work in the Age of AI by Paul R. Daugherty and H. James Wilson (HBR Press, updated edition, 2024). A human-focused take on AI in business.

Why Startups Fail: A New Roadmap for Entrepreneurial Success by Thomas Eisenmann (Currency, 2021). How not to step in the potholes that will doom your venture.

Filterworld: How Algorithms Flattened Culture by Kyle Chayka. Did technology destroy taste?
