What attitudes are different between AI users and nonusers?

Yesterday, I announced that we were releasing the results of a survey, sponsored by Gotham Ghostwriters, of 1,481 writing professionals about their attitudes about AI. From time to time, I’ll be examining and sharing data from that survey in more detail.

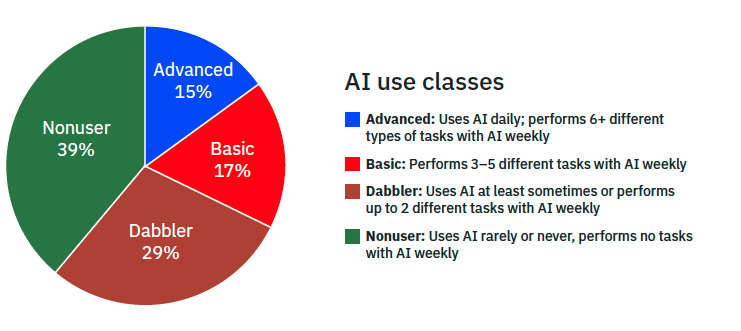

One of the most interesting parts of the survey compared attitudes of different groups of writers, classified by how much they use AI. I classified the writing professionals (excluding fiction writers) into four groups according to this set of criteria.

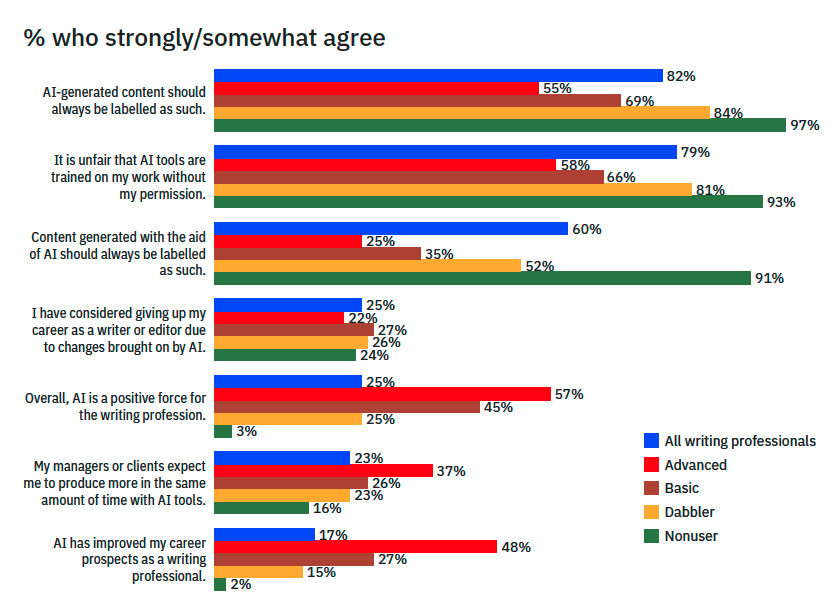

Now let’s look at how many writing professionals in each group agree with various statements about AI.

Copyright (c) 2025 Gotham Ghostwriters and Bernoff.com

These answers are sorted by the size of the blue bar, which is the percentage of all respondents who agree with each statement (or more specifically, who checked “Agree” or “Strongly Agree” on a five-point scale).

There’s a clear pattern, which you can see in the stair-step of the four bars under the blue bar on each question. On some questions, the groups roughly agree. Around one in four writing professionals in each group have considered giving up their career due to AI-driven changes. More than half of the writing professionals in each group believe AI-generated content should be labelled (and for context, only 7% of writing professionals in the survey say they publish AI-generated content without further editing).

But other differences are more dramatic. For example, 57% of the advanced AI users and 45% of the basic AI users think AI is a positive force for the writing profession, while only 3% of the nonusers agree.

It’s revealing that 48% of the advanced users think AI has improved their personal career prospects, and proportionally fewer of the less advanced users agree. Clearly, the heavy AI users have found the tools helpful and believe their AI skills will help them get ahead.

But perhaps the most interesting distinction here is on the question of whether content generated with the aid of AI should be labelled. The AI users know that there are plenty of ways to use AI to improve content, including speeding research, finding flaws, suggesting titles, and recommending outlines or structure. The sophisticated users use AI as a tool, but then leverage their own abilities to make the most of that tool in what they write. This is why only 25% of the advanced users think that if you use AI to help create writing, you need to disclose it.

The nonusers have a completely different perspective, with 91% asserting that content created with the aid of AI must be so labelled. That 91% is pretty close to the 97% who want fully AI-generated content to be labelled. The nonusers don’t seem to see much of a distinction between using AI to create the text and using it just to help, while the heavy users see a great distinction between these two use cases.

What it means

It’s going to become increasingly difficult for writers to work completely without AI. For example, Grammarly includes AI features. If an AI tool points out that you’ve got redundant text in parts of your writing and you fix it, must you disclose that you “used AI” to help improve what you wrote?

This will completely change the discussion around labelling in the near future, because labelling content generated with AI help will seem increasingly problematic.

The capabilities of AI tools have raced way ahead of our ability to judge what’s right and wrong for writers. What the survey has made clear to me is that people who don’t use AI at all are not really in a good position to decide in what ways it should and should not be used. And it concerns me that many of the folks deciding on AI regulations may be in that less knowledgeable group.

I’m going to continue to dig into the data, especially around differences in attitudes and behaviors of the heavy users and nonusers. My hope is that this survey allows us to shed some light on what’s actually happening, in an environment that has at this point devolved more into shouting than factual analysis.

I suggest that AI-free content be labeled. The analogy is the food industry, e.g., Sugar Free, Gluten Free.

Caveat: if you use tools like Grammarly or Copilot, I refer you to John 8:3-7.

I don’t use AI, for the usual reasons. But I’ll concede that writers who use it are probably right, and I’m probably wrong. After all, they’ve both used it and not used it. I’ve only NOT used it. So who am I to say what I’m missing?

My work is scrutinized by government regulators and litigious competitors. I trust my subject matter experts and legal team yet still keep records of every decision that involves potential risk. Using AI tools, as executive management wants us to do, would create delays and diminish quality. They’re not accurate enough for anything consequential.

Hi, Josh. Thanks for pulling together this data.

Would you consider changing the colors in the pie chart to match those in the bar charts? I started reading the bar charts without paying any mind to the key alongside them, which led me to some puzzling conclusions. 😊

Thanks.

I realized that at some point after the report was published. If I could change one thing, it would be those colors.