Warning labels on social media? It’s the algorithm that’s the problem.

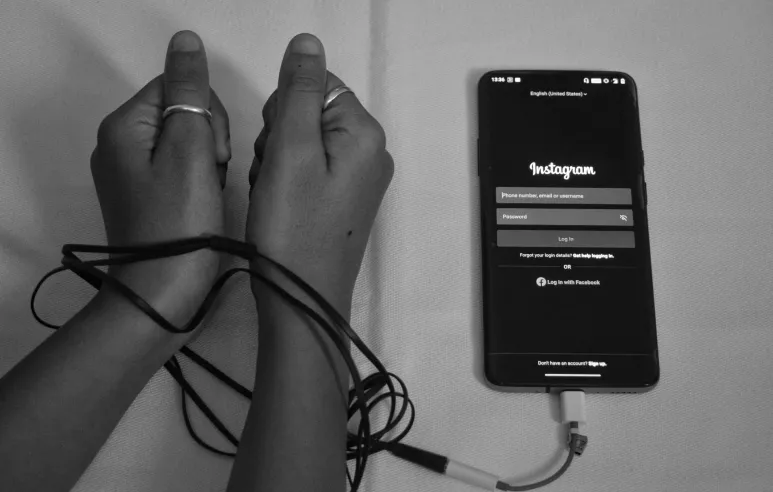

Social media is addictive and harmful to children. There’s plenty of evidence for that. As a result, Surgeon General Vivek Murthy has recommended that Congress require a warning label on social media.

Let’s be real. A warning label would do little. The problem’s in the algorithms. And that’s where the solutions, if any, should come from.

What Vivek Murthy requested

Here’s some of what Vivek Murthy wrote in a New York Times guest essay yesterday.

The mental health crisis among young people is an emergency — and social media has emerged as an important contributor. Adolescents who spend more than three hours a day on social media face double the risk of anxiety and depression symptoms, and the average daily use in this age group, as of the summer of 2023, was 4.8 hours. Additionally, nearly half of adolescents say social media makes them feel worse about their bodies.

It is time to require a surgeon general’s warning label on social media platforms, stating that social media is associated with significant mental health harms for adolescents. A surgeon general’s warning label, which requires congressional action, would regularly remind parents and adolescents that social media has not been proved safe. Evidence from tobacco studies show that warning labels can increase awareness and change behavior. When asked if a warning from the surgeon general would prompt them to limit or monitor their children’s social media use, 76 percent of people in one recent survey of Latino parents said yes.

To be clear, a warning label would not, on its own, make social media safe for young people. The advisory I issued a year ago about social media and young people’s mental health included specific recommendations for policymakers, platforms and the public to make social media safer for kids. Such measures, which already have strong bipartisan support, remain the priority.

A symbolic measure at best

Will children’s social media use decline if there’s a warning label on every social media site?

I doubt it.

A survey of 558 Latino parents isn’t enough to convince me. Parents in any survey are likely to report that they’ll do things to keep their children safe. It’s a lot harder to do those things than to say them.

I do think such a label might help publicize the dangers of social media, causing some parents to take it more seriously. This might lead to more meaningful discussions between parents and children. But if children, adolescents, and teenagers have mobile phones, they’re likely to be tempted by social media. And social media is addictive. So unless you can restrict phone use, it’s pretty hard to stop social media use.

And I believe teens (and most adults) are powerless in the grip of social media. They’re addicted to the dopamine hits that come from likes and shares and comments.

It’s a real problem. This is just the wrong solution. Slapping a label on it won’t slow things down much.

The problem’s in the algorithm — and the regulation belongs in there, too

Everything wrong with social media is rooted in the algorithm. Social media is optimized for addiction. It’s optimized for anger and greed and jealousy. The more addictive it is, the more time people spend with it, and the more profitable it is. No amount of lip service, toothless advisory boards, and pro-social chest-beating from Meta, Google/YouTube, TikTok, or X can change that.

I’ve already made one suggestion: a Fairness Doctrine intended to deliver more balanced news and break down the political filter bubble inside tools like Facebook and YouTube.

Just as Boeing has proved it cannot regulate itself, neither can Meta or Google. Social media is too addictive to be left in the profit-focused hands of a corporation. In the absence of regulation, addiction economics always generates more addictive products.

There should be regulators on-site at every social media company. Every algorithmic change should be subject to review. If a change makes the product more addictive to children, more likely to generate violence, or more likely to fuel partisanship, it should be vetoed or modified.

Until pro-social regulators take part in algorithmic choices at major social media companies, those platforms will become more and more addictive. And no warning label will make much difference.

I’d love for regulators to approve algorithm changes, but I’m skeptical.

I’m reminded of the new book The Everything War: Amazon’s Ruthless Quest to Own the World and Remake Corporate Power by Dana Mattioli. One eye-opener: Amazon successfully killed legislation to prevent the very things its management said it wasn’t doing. I fear that social media companies would be similarly successful.

Josh, from this father’s perspective, it isn’t solely the algorithm that’s the problem but the permissible content. Much of what I see on Instagram would violate “PG” or “R” film ratings were it subject to similar scrutiny. Have we done all we can to protect our children? I think we all know the answer.