Some questions about deplatforming the Parler platform

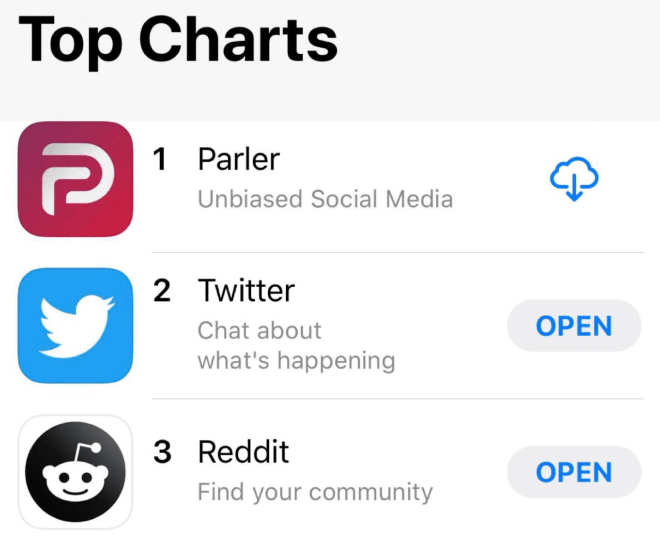

Trump supporters have been decamping to Parler, a social network started as an alternative to Twitter and Facebook. Now, due to decisions by Apple, Google, and Amazon, Parler has had to shut down. Is this fair? Is it right? Or is it a threat to the open operation of the internet?

After the invasion of the US Capitol building last week, Twitter, Facebook, Shopify, and other social networks and vendors shut down President Trump’s accounts. The rationale was that he had incited and would likely continue to incite violence. Regardless of what you think of these decisions, it was well within the purview of these companies to make them. They decide what is allowed to exist on their platforms, and incitements to violence are clear violations of their terms of service.

The case of Parler is different. The idea of vendors shutting down entire social networks because they don’t like their politics and policies is troubling. Because it’s a complicated issue, I’ll explore it in some detail, in the form of a Q & A.

What is Parler?

Parler is a social network launched in 2018 as an alternative to Twitter and Facebook. Its founders launched it in reaction to policies and actions on mainstream social networks that they perceived as interfering with conservative and Trumpist points of view.

Parler described itself as a “free speech” alternative to mainstream social networks, with less censorship. It eventually grew to 4 million active users.

Is this a typical “disruptive innovation”?

If you look closely at startups that are described as “disruptive,” they tend to flout some rule in the name of serving customers better. For example, Uber evaded taxi licensing rules, Airbnb evaded hotel taxes and licensing, and YouTube flouted copyright protection rules. (This is how they start — after a while, they get big and then come to some accommodation with the rules.)

The rule that Parler flouted was that social networks needed to have policies and procedures that block certain types of content. Or at least, that was the positioning.

What was really going on at Parler?

As you might imagine, with such lax policies, Parler was filled with pornography and hucksters of all kinds.

But as it turns out, the “free speech” policy didn’t describe what was actually happening. Parler implemented obscenity and violence policies. And it had hundreds of volunteer content moderators who tended to have conservative points of view. People expressing liberal points of view found themselves banned.

Parler evolved into a safe space for conservative and Trump-supporting perspectives.

Why did Apple and Google take action against Parler’s apps?

As of now, the Apple app store and the Google Play store have removed the Parler app.

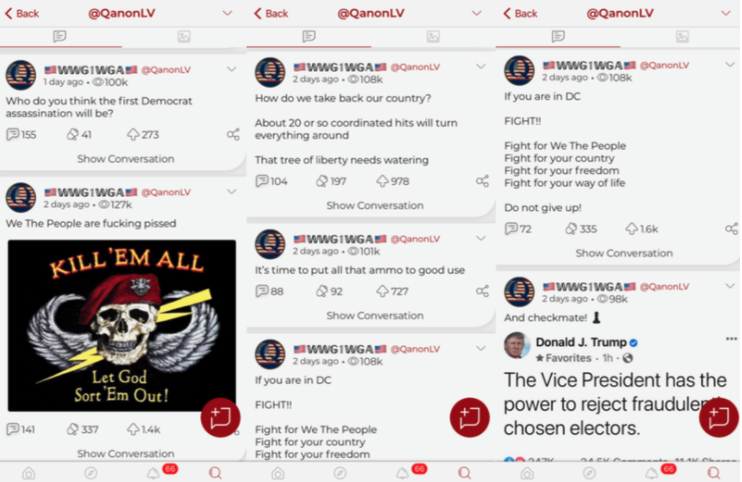

Apple does not allow apps that feature pornography or incite violence. Apple’s statement to Parler said “We have received numerous complaints regarding objectionable content in your Parler service, accusations that the Parler app was used to plan, coordinate, and facilitate the illegal activities in Washington D.C. on January 6, 2021 that led (among other things) to loss of life, numerous injuries, and the destruction of property. . . The app also appears to continue to be used to plan and facilitate yet further illegal and dangerous activities.” For example, here’s a screen shot from the service:

Apple requested a moderation plan within 24 hours, and when it didn’t receive one, removed the app from the App Store.

Google’s actions were similar. “We’re aware of continued posting in the Parler app that seeks to incite ongoing violence in the US,” a Google spokesman told Ars Technica. “In light of this ongoing and urgent public safety threat, we are suspending the app’s listings from the Play Store until it addresses these issues.”

Parler’s CEO was defiant. He posted this on Parler: “We will not cave to pressure from anti-competitive actors! We will and always have enforced our rules against violence and illegal activity. But we WONT cave to politically motivated companies and those authoritarians who hate free speech!”

How and why did Amazon make it difficult or impossible for Parler to operate?

Even without apps, Parler could continue — users could just go to its Web page on their browser, on a PC, a phone, or a tablet.

However, like many other popular internet applications, Parler operated on a set of rented virtual servers: Amazon’s cloud service, known as Amazon Web Services or AWS. When you interacted with Parler, you were actually interacting with code running on Amazon’s servers.

This past Saturday, AWS threatened to suspend Parler’s ability to use its servers. As AWS’ Trust and Safety team explained in an email, “Recently, we’ve seen a steady increase in . . . violent content on your website, all of which violates our terms. . . . It’s clear that Parler does not have an effective process to comply with the AWS terms of service. . . . Because Parler cannot comply with our terms of service and poses a very real risk to public safety, we plan to suspend Parler’s account effective Sunday, January 10th, at 11:59PM PST. ” The complaint included this screen shot as an example of the threats of violence:

The AWS terms of service say:

You may not use, or encourage, promote, facilitate or instruct others to use, the Services or AWS Site for any illegal, harmful, fraudulent, infringing or offensive use, or to transmit, store, display, distribute or otherwise make available content that is illegal, harmful, fraudulent, infringing or offensive. Prohibited activities or content include:

Illegal, Harmful or Fraudulent Activities. Any activities that are illegal, that violate the rights of others, or that may be harmful to others, our operations or reputation . . .

AWS apparently determined that allowing posts inciting violence was “offensive” and “may be harmful to others.”

AWS, as a crucial part of internet infrastructure, hosts all sorts of content. It has far more permissive policies than, say, Facebook or the Apple and Google app stores. This makes sense. Nudity is legal to put online, but is banned from Facebook, because its policy is to keep the site free of buttocks, penises, and women’s nipples. Apple similarly won’t host porn apps and has taken down politically sensitive content.

But AWS hosts all sorts of things, and because it operates behind the scenes, you’d never know it. Obviously, AWS has to take down things that are illegal, such as child pornography, but it generally doesn’t get involved in content moderation.

Couldn’t Parler move to other web servers?

In theory, it could. In practice, migrating the content off of AWS would be a huge job.

And it wasn’t just AWS. Now nearly all of Parler’s technology vendors have rejected it. As its CEO John Matze wrote yesterday:

We will likely be down longer than expected. This is not due to software restrictions—we have our software and everyone’s data ready to go. Rather it’s that Amazon’s, Google’s and Apple’s statements to the press about dropping our access has caused most of our other vendors to drop their support for us as well. And most people with enough servers to host us have shut their doors to us. We will update everyone and update the press when we are back online.

Again, there is precedent. For example, Cloudflare, the cloud security company, dumped 8chan after it hosted a manifesto from a mass shooter in El Paso.

In the case of Parler, the company will have to replicate everything it does — from email updates to security to hosting and internet distribution — with vendors that don’t mind its policies. That will take many months, if it’s even possible at all.

Why did this happen now?

There are two ways to look at it. Both have some truth to them.

For one thing, the attack on the Capitol was horrifying. If there was ever a time for a tech company to reconsider its role in dangerous real-world events, now is that time.

There is also risk moving forward. It’s clear from some of the posts that have been shared in news accounts that the types of people who planned the Capitol attack are also planning attacks on or around Biden’s inauguration a week and a half from now. If your company is hosting or enabling folks who are planning violence, that’s a good reason to be reconsidering what you do.

But the other way to look at the timing is political. When Trump is president and the Republicans control the Senate, it is politically problematic to regulate conservative and nationalist speech. Starting on January 20, Democrats will control the presidency and both houses of Congress. Tech companies that fail to rein in dangerous right-wing actors are likely to be subject to lots of criticism and potential regulation. The wind has shifted in Washington, and tech companies know it.

So where’s the problem here?

Every time an infrastructure company like AWS ejects a customer like Parler, a group of internet purists complains. And they should.

In theory, anyone can post anything they want on the internet.

In practice, every time you view something online, there are many providers working with the company that is serving the content. Somebody sold the domain name you typed into your browser. Somebody sold them or rented them servers. Someone is providing security software to fend off attacks. They probably programmed it in some language they bought a license for. In general, those providers don’t get involved with what you do with their tools. But if you’re doing things that are illegal, they may have a problem with it.

It is very hard to draw the line. Here’s a thought experiment. Al is a drug dealer. Nancy contacts Al with her Gmail account using her Samsung phone on the Verizon network. Nancy asks Al about the availability of some weed.

Should Google read all of Nancy’s emails and check for illegal activity? If it detects it, should it alert law enforcement? Should it kick Nancy off of Gmail?

Should Verizon monitor all the traffic across its network and ban Nancy for illegal activity?

Should Samsung build tools into its phones that detect and prevent illegal activity like Nancy and Al’s?

Where should we draw the line?

I agree with the critics that cases of infrastructure providers shutting down speech should be very, very rare. I’d be extremely concerned if this sort of activity spreads to shutting down political speech that has nothing to do with violence.

It’s also problematic that the shutdown affects a social network. People post content on social networks, content that is difficult to monitor. There are always going to be cases where people post threats. It’s not as if John Matze threatened violence.

The First Amendment doesn’t apply — this isn’t about government regulation. But even if this is not a First Amendment case, it’s troubling. There are and ought to be as few limits on speech as possible.

Threatening violence is one such limit.

The Parler case sets a precedent:

- It repeatedly hosted speech inciting violence.

- Its leaders minimized that threat, did not take it seriously, and refused to negotiate the improvements that its vendors requested to reduce the threat of violence.

- Its moderation policies and procedures were inadequate to the task of keeping violent people from coordinating their activities on the platform.

- Its inability to rein in such harm, if unchecked, would likely lead to further violence.

- The threat of violence was imminent.

I am hopeful that at Amazon and at every other technology vendor, there’s a discussion going on right now about the exact conditions under which they would ban a customer. Such conditions should be rare, clearly defined, and clearly dangerous. If such bans are perceived as arbitrary, that will damage the fabric of the internet.

I’d also hope that every company creating and hosting user content is asking itself right now if its moderation policies are up to the task — or if it is at risk of similar action from its vendors.

If you host a bunch of violent kooks intent on mayhem — and you repeatedly do nothing about it — your vendors are going to abandon you. And I’m okay with that. You should be able to say just about whatever you want on those platforms. But threats of coordinated, armed violence clearly cross the line.

Who is John Matzke?

Typo. John Matze is the CEO of Parler.

The CEO of Parler

I agree “threats of coordinated, armed violence clearly cross a line”, but I disagree with the conclusion that tech censorship is the solution, even if its implementation were practical (it’s not). The root of the problem is online anonymity. Instead, users should be held responsible for their postings and prosecuted for threats of violence.

It’s not as if Trump and his supporters do not have other communication outlets. They could hold press conferences and respond to questions about the veracity of their claims. They could write editorials in local newspapers and be prepared to respond to questions about their positions.

If Amazon does nothing, then it faces its own liability—particularly since Section 230 may change.

You are missing several big issues here:

— There are much worse things on other Social Media platforms that AWS did not take action against because Amazon agrees with the politics of those social media providers. If some Leftist says something much worse on Twitter than was said on Parler, it is simply overlooked or not taken seriously. It is a hypocritical double-standard driven by hysteria.

— What constitutes “incitement to violence” is defined at the arbitrary whim of the infrastructure provider. This demonstrates how meaningless whatever “reason” they use to deplatform Parler is. If Amazon decides that Donald Trump saying “have a nice day” on Parler is incitement to violence, and as a result deplatforms Parler, Amazon is perfectly within its rights to do so. NONE of the things that Amazon is saying was “incitement” would ever hold up in a court of law as evidence of incitement.

— The leaders of Parler take threats of violence very seriously. Not agreeing that a vague, innocuous comment is “incitement” is not the same as not taking it seriously. Amazon’s Trump Derangement Syndrome does not magically make an innocuous statement into something violent or into incitement.

— Other politicians besides Trump have said MUCH worse things than what was said on Parler, and still have their social media accounts. While this isn’t an infrastructure issue, it puts the double-standard in great perspective.

— Amazon did not give Parler a period of notice to get all of its data off of their servers. This has to be a breach of contract. If they had given Parler the customary 30 days, that would have been fine.

Not quite. Your facts are wrong.

Facebook, Twitter, Instagram, and Google don’t use AWS. So it couldn’t take action against them. Were you thinking of the pernicious plotting taking place on, say, Pinterest?

Amazon has posted dozens of examples of plotting violence in its response to the Parler lawsuit.

“Other people do it, too” is not an argument. And in any case, I think Trump has more followers than any of the others.

Amazon has been warning Parler since November.

No, my facts are right, I only mentioned Twitter, because that is the site most like Parler. See here: https://www.bloomberg.com/news/articles/2020-12-15/twitter-will-use-amazon-web-services-to-power-user-feeds Also, there are other sites that AWS hosts where they simply don’t care about violence as long as the site follows the “correct” politics.

As I said, Amazon defining “plotting violence” at its own arbitrary whim does not constitute plotting violence. Anything that would actually constitute that was removed from Parler. That’s the rub — anything that Parler looked at that would actually hold up in court as “plotting violence”, Parler had removed, and more. And reported these things to Law Enforcement. Those things were removed using Parler’s own standards, not just Amazon’s standards.

As I also said, there were worse examples than Parler on Twitter, yet AWS is hosting Twitter, and did not demand that Twitter ban the politicians who had said much explicitly worse things than Trump.

A “warning” does not constitute “30 days notice”. Parler’s contract explicitly said that AWS must give them 30 days notice, and then they can terminate the contract for ANY reason. Just because Lefties are in a hysteria where they think Donald Trump saying “have a nice day” is incitement to violence, that doesn’t give them the right to arbitrarily breach contracts.

Still wrong. The article you cite is about what Twitter will do in the future. It does not use AWS now.

Check Amazon’s response to Parler’s lawsuit. It “highlights more than a dozen examples that Amazon said it reported to Parler, including calls for a civil war and the deaths of Democratic lawmakers; tech company CEOs including Jeff Bezos, Mark Zuckerberg and Jack Dorsey; members of professional sports leagues; former Transportation Secretary Elaine Chao; and US Capitol Police, among others.”

See https://www.cnn.com/2021/01/12/tech/amazon-parler-section-230/index.html

AWS’s actions in the case of Parler are a bridge too far.

Without free speech and open dialog, tyranny and corruption are made easier. I may not like what people may say, but I will always defend their right to say it. Bad ideas always lose out to good ones, unless people don’t have a chance to have dialog about them.

I’ll gladly rejoin Parler when it’s eventually back, to be one of the people who push back on the bad ideas.