Newsletter 1 November 2023: AI regulation fail; Scholastic reversal; blocking deep fakes

Newsletter Week 16: The president’s executive order on AI is incoherent, plus Scholastic ends its book ghetto, a bill that attempts to outlaw image theft, three people to follow, three books to read, and my book makes the Inc. Non-Obvious longlist.

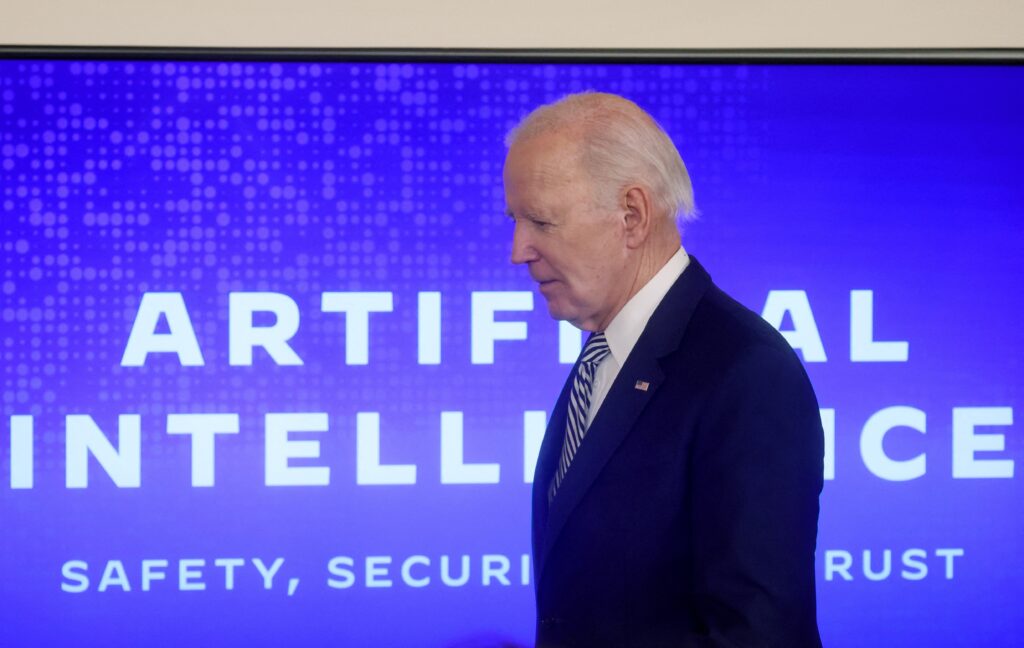

What’s wrong with Joe Biden’s AI executive order

Two weeks ago, I complained that Marc Andreessen’s “Techno-Optimist Manifesto” was feckless. So you’d think I’d be in favor of AI regulation. But regulating AI isn’t easy, as you can see from the Biden Administration’s barely coherent “Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence.”

If you like reading stuff like this, get into a nice cozy spot, because the executive order is 20,000 words long. So how do I know it’s incoherent? Start with the generic platitudes:

Section 1. Purpose. Artificial intelligence (AI) holds extraordinary potential for both promise and peril. Responsible AI use has the potential to help solve urgent challenges while making our world more prosperous, productive, innovative, and secure. At the same time, irresponsible use could exacerbate societal harms such as fraud, discrimination, bias, and disinformation; displace and disempower workers; stifle competition; and pose risks to national security. Harnessing AI for good and realizing its myriad benefits requires mitigating its substantial risks. This endeavor demands a society-wide effort that includes government, the private sector, academia, and civil society.

My Administration places the highest urgency on governing the development and use of AI safely and responsibly, and is therefore advancing a coordinated, Federal Government-wide approach to doing so. The rapid speed at which AI capabilities are advancing compels the United States to lead in this moment for the sake of our security, economy, and society.

Here are Biden’s eight guiding principles for regulating AI:

Artificial Intelligence must be safe and secure.

Promoting responsible innovation, competition, and collaboration will allow the United States to lead in AI and unlock the technology’s potential to solve some of society’s most difficult challenges.

The responsible development and use of AI require a commitment to supporting American workers.

Artificial Intelligence policies must be consistent with my Administration’s dedication to advancing equity and civil rights.

The interests of Americans who increasingly use, interact with, or purchase AI and AI-enabled products in their daily lives must be protected.

Americans’ privacy and civil liberties must be protected as AI continues advancing.

It is important to manage the risks from the Federal Government’s own use of AI and increase its internal capacity to regulate, govern, and support responsible use of AI to deliver better results for Americans.

The Federal Government should lead the way to global societal, economic, and technological progress, as the United States has in previous eras of disruptive innovation and change.

This is motherhood, apple pie, and self-contradictory. We’re going to be safe, cheerlead, employ Americans, be fair, worry, and lead. Got it?

Of course, the devil is in the details — and this topic is devilishly detailed. Luckily, the administration has managed in this document to do what no one in computer science or technology has done before: to define Artificial Intelligence. Specifically:

The term “artificial intelligence” or “AI” [means] a machine-based system that can, for a given set of human-defined objectives, make predictions, recommendations, or decisions influencing real or virtual environments. Artificial intelligence systems use machine- and human-based inputs to perceive real and virtual environments; abstract such perceptions into models through analysis in an automated manner; and use model inference to formulate options for information or action.

By that definition, which of the following is not AI?

- ChatGPT

- Amazon

- C++

- You, sitting at a keyboard in front of a stock-trading application

- An aircraft engine

- The power grid

The whole house of cards is built on this vague foundation. For example:

The term “AI model” means a component of an information system that implements AI technology and uses computational, statistical, or machine-learning techniques to produce outputs from a given set of inputs.

The term “AI system” means any data system, software, hardware, application, tool, or utility that operates in whole or in part using AI.

The term “generative AI” means the class of AI models that emulate the structure and characteristics of input data in order to generate derived synthetic content. This can include images, videos, audio, text, and other digital content.

I am reminded of Justice Potter Stewart’s definition of pornography: “I know it when I see it.”

I do not pretend to be sophisticated enough to fix this problem. But the question is whether I trust federal regulators and bureaucrats to regulate AI based on this definition.

I don’t. Do you?

News for authors and others who think

Scholastic has reversed its plan to put books on racial and sexual themes into a separate ghetto to make it easier for school districts to ban them. They’ll lose business this way, but that’s better than selling their souls.

A proposed “No Fakes Act” would make it illegal to create AI replicas of people’s likenesses without their permission. Good idea, but pretty hard to police.

Three people to follow

Tracy Swedlow, TV industry observer and proprietor of the TV of Tomorrow show.

David Reed, provocative and incisive AI skeptic.

Shel Holtz, wizard of internal communications.

Three books to read

Ignore Everybody: And 39 Other Keys to Creativity by Hugh McLeod (Portfolio, 2009). Acidly sarcastic and revealing cartoons about corporate life and being human.

Invisible Robots in the Quiet of the Night: How AI and Automation Will Restructure the Workforce by Craig Le Clair (Forrester, 2019). He’s been thinking about automation a long time, and it shows.

Academia Obscura: The Hidden Silly Side of Higher Education by Glen Wright (Unbound, 2017). If you’re still in academe, this might make you think twice about staying.

Centiplug

Build a Better Business Book is No. 9 of 100 books on the Inc. Non-Obvious longlist of the best business books. (Yes, it’s in alphabetical order, but still!)

Josh, your rebuttal to the AI executive order is also vague. Good grief! So, who SHOULD regulate AI?

You have a point. The feds should regulate, but I’d be happier if I had some clue how they would do that properly. Probably requires legislation. And that seems pretty close to impossible.

Not convinced by your critique of the Executive Order. Obviously it Whereas’s everything under the sun; that’s the conceit of the genre. What negative effects do you anticipate from this “self-contradictory” “incoherence”? (And how would a unified, oversimplified view of the outcomes and priorities be an improvement?)

The definition seems to suggest that AI includes anything at least as sophisticated as your hobby horse, “the algorithm.” This actually seems straightforwardly sensible to me.