Ethan Mollick required his students to use ChatGPT. Their experience is a model for the future.

Ethan Mollick, associate professor at Penn’s Wharton School, required his students to use the AI writing tool ChatGPT in his classes last semester. You should definitely check out his description of the experience; it’s full of real-world insights that are far more valuable than the noodlings of naysayers.

Start with the intention to learn

Unimaginative professors want to block students from using AI writers. This is as misguided as telling students they can’t use spell-checkers. ChatGPT and similar systems are tools, and students will use these tools, as will real-world writers.

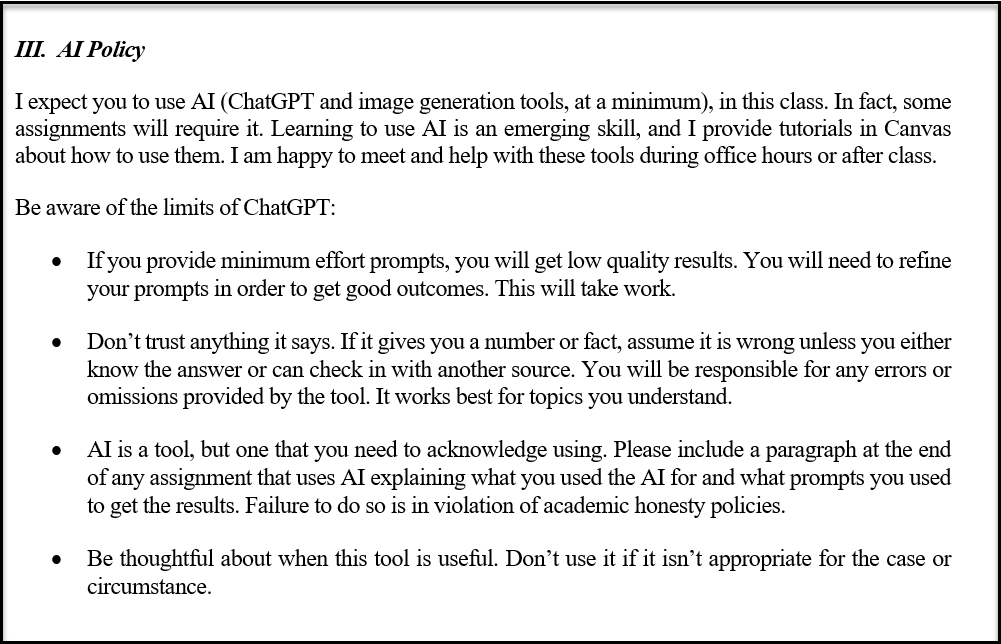

But there is a need for policy. Here’s Mollick’s, which is excellent:

This puts the focus on learning to use AI creatively, engineering good prompts, checking facts, and documenting the tools you use. These are the skills students (and everyone) need, so they belong in any class that requires analytical thinking and writing.

Key insights from Mollick’s classes

Here’s what I took away from Mollick’s description. First, these tools are conversational. Your first prompt won’t give the best results — you must refine it. According to Mollick, the best approach is what he calls co-editing: refining the prompt based on the weaknesses in what the AI generates. Here’s an example:

- Generate a 5 paragraph essay on selecting leaders

- That is good, but the third paragraph isn’t right. The babble effect is that whoever talks the most is made leader. Correct that and add more details about how it is used. Add an example to paragraph 2

- The example in paragraph 2 isn’t right, presidential elections are held every 4 years. Make the tone of the last paragraph more interesting. Don’t use the phrase “in conclusion”

- Give me three possible examples I could use for paragraph 4, and make sure they include more storytelling and more vivid language. Do not use examples that feature only men.

- Add the paragraph back to the story, swap out the second paragraph for a paragraph about personal leadership style. Fix the final paragraph so it ends on a hopeful note.

This is what Mollick calls “prompt-crafting,” and it’s an essential skill for all writers.

The other fascinating insight is that having students review and check the accuracy of purported facts in AI-generated writing is an effective teaching technique. As he writes:

Students understood the unreliability of AI very quickly, and took seriously my policy that they are responsible for the facts in their essays. It was clear that they carefully checked the assertions in the AI work (another learning opportunity!), and many reported finding the usual hallucinations — made up stories, made up citations — though the degree to which these problems were overt varied from prompt to prompt. The most interesting fact-checks were the ones focused on subtle differences (“it captured the basic facts of the example, but not the nuance”), suggesting deep engagement with the underlying concepts.

Teaching tools is just as important as teaching skills

If your job is to do what a machine can do just as well or better, you'll soon be out of a job. Nobody is tightening screws on an assembly line, setting blocks of type on a printing press, or doing math calculations with a pencil anymore.

Instead, we need to teach people to use the tools effectively. In the examples I just cited, that means maintaining robotic assembly lines, designing type laid out by software, and building clever calculations in spreadsheets. And in the case of AI, that means what Mollick is now teaching: engineering effective prompts and improving AI-generated text by engaging with the concepts in it.

I’d go further, and say we need to teach writers how to create fascinating text, deploy humor and drama, research interesting stories, and distinguish real insights from the banal and generic. Teaching with ChatGPT or similar systems is a great way to do that — and more accurately reflects how people will now be writing in professional settings.

You can stand hip-deep on the beach and try to fight the tide. Or you can learn to swim and use it to carry you where you want to go. And the smart people aren't the ones standing on the shore shouting about the tide, either.

You're spot-on that, for people who already know how to write, the challenge is teaching them to use these newly invented writing tools, which do (if not yet perfectly) what people have done manually until now. Kudos to Professor Mollick for trying to solve that, and kudos to you for your immediate recognition that fighting the use of the A.I. writing tools is nuts.

But there's a whole dimension to the problem of educating people in a world where A.I. writing tools have been invented that I haven't seen addressed. The students being taught to use ChatGPT in college today have already had 12 or more years of learning to write the old-fashioned way. Presumably they draw on those writing skills while learning to use the new A.I. writing tools. My question is: how should we change the education of younger kids, who haven't yet learned to compose anything themselves, now that A.I. writing tools have started to become available?

Consider the analogous problem of the invention of the calculator. Is it okay for kids growing up to not learn basic arithmetic because calculators can easily do what we used to do manually? Or do kids today still need to be taught and learn to do those basic computations manually as a foundation for dealing with problems that have a quantitative component, even though thanks to calculators in real life they won’t need to do those basic arithmetic calculations manually outside of school?

Apply this to a world that now has A.I. writing tools. What will need to be taught to young kids about writing to make them competent in a world where A.I. writing tools exist? Is it different from what students have always needed to learn up to the very recent introduction of A.I. writing tools?
