Does using AI dull your critical thinking skills?

Researchers at Carnegie Mellon and Microsoft Research in the UK have completed a study to determine how generative AI affects critical thinking. Not surprisingly, knowledge workers who use AI feel they are thinking less critically.

The researchers surveyed digital workers by explaining what critical thinking was and then asking them whether they engaged in it while conducting tasks with the aid of generative AI tools. Since these impressions are self-reported, you would expect a bias: people tend to believe they think critically more often than they actually do. But despite that bias, people reported leaning on AI as a crutch and a substitute for actual thinking.

Here’s the text of the report’s conclusion:

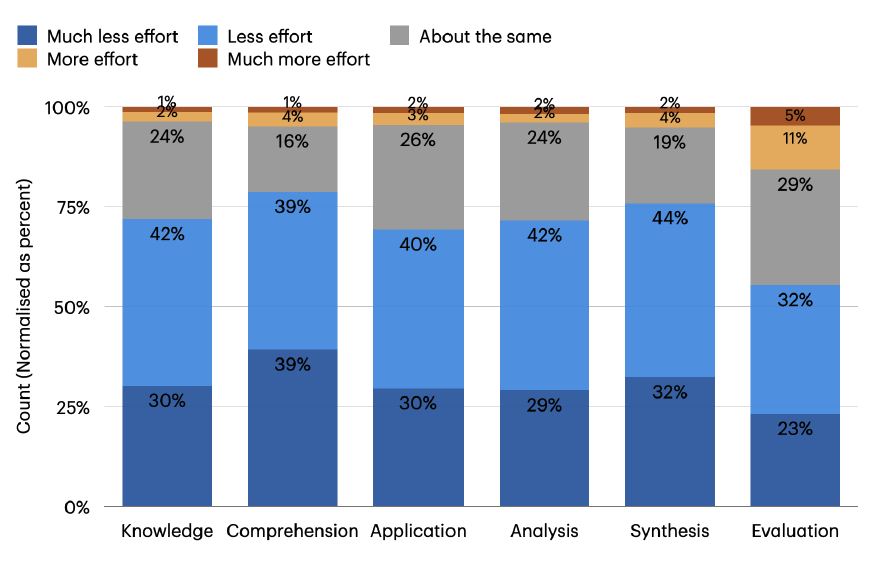

We surveyed 319 knowledge workers who use GenAI tools (e.g., ChatGPT, Copilot) at work at least once per week, to model how they enact critical thinking when using GenAI tools, and how GenAI affects their perceived effort of thinking critically. Analysing 936 real-world GenAI tool use examples our participants shared, we find that knowledge workers engage in critical thinking primarily to ensure the quality of their work, e.g. by verifying outputs against external sources. Moreover, while GenAI can improve worker efficiency, it can inhibit critical engagement with work and can potentially lead to long-term overreliance on the tool and diminished skill for independent problem-solving. Higher confidence in GenAI’s ability to perform a task is related to less critical thinking effort. When using GenAI tools, the effort invested in critical thinking shifts from information gathering to information verification; from problem-solving to AI response integration; and from task execution to task stewardship. Knowledge workers face new challenges in critical thinking as they incorporate GenAI into their knowledge workflows. To that end, our work suggests that GenAI tools need to be designed to support knowledge workers’ critical thinking by addressing their awareness, motivation, and ability barriers.

AI should stimulate thought, not simulate it

AI is supposed to make work easier, and it clearly is. What’s the big deal?

The answer depends on how you define “work.”

If work means producing content or analyses to hit some sort of goal (e.g. “Finish the report by the end of the week”), then AI risks automating meaningless tasks. The less the human brain is engaged, the less likely the result is to be useful for understanding the world or accomplishing organizational goals.

If work means acquiring insight or communicating something meaningful, then AI can help people to prepare, gather information, brainstorm, test hypotheses, and reach conclusions. But the key here is the word “help.” It’s a tool, not a substitute for thinking.

Part of the issue is that AI tools are designed to mimic human conversation. This makes people feel as if they are conversing with a thinking being, which in turn causes them to trust the results they see. That’s unfortunate and risky.

If AI tools were designed to make you think more, rather than less, that might help.

In the meantime, practice using prompts like these:

- What am I missing here?

- What are some other ways to think about this?

- How likely is it that these results are wrong? If they might be, how would I investigate that?

- You wrote Xxxx. What makes you believe that? What’s your evidence?

- What’s the best source for perspectives on this topic?

In case your AI isn’t provocative enough, I’ll keep provoking you. But there’s only so much I can do. So get in the habit of provoking yourself.

Josh, if what I’m seeing is typical, it goes the other direction. Diminished critical thinking skills lead to the use of, and sometimes reliance on, AI tools. It’s still more work to check AI output for errors than it is to write something original based on knowledge and trusted sources.

Does the provocation need to change because of AI?

Will anyone do a before/after or other controlled study?

Did they include a hypothesis on causation or the direction of influence?