A better solution to fake news, starting with Facebook

Fake news is a big problem, and Facebook is at the center of it. It’s clear that made-up and distorted stories amplified on Facebook helped swing the 2016 presidential election. But the simple and “obvious” solutions to the problem have backfired. Now Facebook is at work on something better. And I have a proposal based on their suggestion.

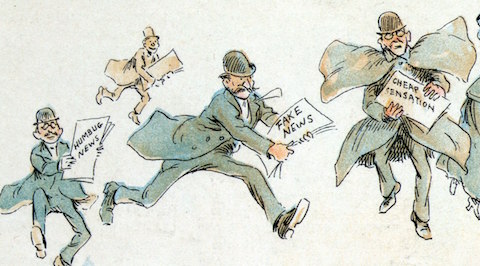

The term “fake news” should have a meaning: purported news items that are false, contradicted by facts, and unverified. (Despite Donald Trump’s tweets, “fake news” does not mean “news I disagree with.”) The challenge is that social media algorithms spread items that people share, comment on, and react to, regardless of whether they are true. So the more outrageous the news, the more the algorithm likes it. This bias toward outrage improves engagement and ad values at the price of undermining our trust in what we read.

I had proposed a system where people could challenge the veracity of news items, and Facebook could flag them based on how trustworthy those challenging the stories were. Facebook implemented something with a similar effect, based on the opinion of media fact-checkers. But as Facebook product manager Tessa Lyons described in a recent post, the effects weren’t quite as the company expected:

News Feed FYI: Replacing Disputed Flags with Related Articles

By Tessa Lyons, Product Manager

Facebook is about connecting you to the people that matter most. And discussing the news can be one way to start a meaningful conversation with friends or family. It’s why helping to ensure that you get accurate information on Facebook is so important to us.

Today, we’re announcing two changes which we believe will help in our fight against false news. First, we will no longer use Disputed Flags to identify false news. Instead we’ll use Related Articles to help give people more context about the story. Here’s why.

Academic research on correcting misinformation has shown that putting a strong image, like a red flag, next to an article may actually entrench deeply held beliefs – the opposite effect to what we intended. Related Articles, by contrast, are simply designed to give more context, which our research has shown is a more effective way to help people get to the facts. Indeed, we’ve found that when we show Related Articles next to a false news story, it leads to fewer shares than when the Disputed Flag is shown.

Second, we are starting a new initiative to better understand how people decide whether information is accurate or not based on the news sources they depend upon. This will not directly impact News Feed in the near term. However, it may help us better measure our success in improving the quality of information on Facebook over time.

False news undermines the unique value that Facebook offers: the ability for you to connect with family and friends in meaningful ways. It’s why we’re investing in better technology and more people to help prevent the spread of misinformation. Overall, we’re making progress. Demoting false news (as identified by fact-checkers) is one of our best weapons because demoted articles typically lose 80 percent of their traffic. This destroys the economic incentives spammers and troll farms have to generate these articles in the first place.

But there’s much more to do. By showing Related Articles rather than Disputed Flags we can help give people better context. And understanding how people decide what’s false and what’s not will be crucial to our success over time. Please keep giving us your feedback because we’ll be redoubling our efforts in 2018.

The solution to the fake news problem is now in view

It’s appalling but perhaps not that surprising that identifying an article as “disputed” creates more readership. I thought flagging fake news would be a good strategy, and Facebook obviously did as well. Turns out it’s not — we all want to read what’s supposedly fake and register our outrage or support.

Certainly showing related articles to provide context will help, but it’s not going to solve the problem. It will make people who want to be educated smarter. That’s a tiny part of the problem, and it doesn’t help people for whom critical thinking is a lost skill.

But the most encouraging part of this statement is here:

Demoting false news (as identified by fact-checkers) is one of our best weapons because demoted articles typically lose 80 percent of their traffic. This destroys the economic incentives spammers and troll farms have to generate these articles in the first place.

This is the fix we need; it would put the troll farms out of business and could provide the needed algorithmic antidote to those who would disrupt the information flow prior to the 2018 and 2020 elections.

But fact-checkers cannot possibly deal with the flood of false information out there. So I propose another simple solution that could actually work:

- Provide a “Fake” reaction alongside “Haha,” “Sad,” and “Angry.”

- Weight “Fake” reactions higher in the algorithm if the person who uses them also includes a comment with a link to a fact-checking site or other evidence of falsehood.

- Weight “Fake” reactions higher if the person who posts them not only documents them this way, but has a history of identifying fake news from all political viewpoints, not just one side.
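As a rough illustration, the three weighting rules above could be sketched as follows. This is not Facebook's algorithm. The `Flagger` record, the two-sided "left/right" simplification, and every constant are assumptions made up for the example.

```python
from dataclasses import dataclass

# All constants are placeholders chosen for illustration, not tuned values.
BASE_WEIGHT = 0.1      # a bare "Fake" click with no supporting evidence
EVIDENCE_BONUS = 1.0   # added when the comment links to evidence
HISTORY_BONUS = 2.0    # maximum extra credit for an even-handed track record


@dataclass
class Flagger:
    """Hypothetical record of one user's past documented "Fake" reactions."""
    left_flags: int = 0    # documented flags on left-leaning items
    right_flags: int = 0   # documented flags on right-leaning items


def balance(flagger: Flagger) -> float:
    """Return 0.0 for a one-sided (or empty) history, 1.0 for an even split."""
    total = flagger.left_flags + flagger.right_flags
    if total == 0:
        return 0.0
    return 2 * min(flagger.left_flags, flagger.right_flags) / total


def reaction_weight(flagger: Flagger, has_evidence_link: bool) -> float:
    """Weight of a single "Fake" reaction under the three rules."""
    if not has_evidence_link:
        return BASE_WEIGHT
    return BASE_WEIGHT + EVIDENCE_BONUS + HISTORY_BONUS * balance(flagger)
```

In this sketch a flagger who only ever flags one side earns the evidence bonus but no history bonus, and setting `BASE_WEIGHT` to 0 would make undocumented clicks count for nothing. A real system would also need to consider how many flags a person has made, which this version ignores.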

The net effect of this would be to elevate a class of citizen fact-checkers on Facebook, those who identify fake news on all sides whenever they see it and document their work. Like well-respected editors on Wikipedia, those fake-finders would enable Facebook to identify what are likely to be fake stories. And having done so, Facebook should:

- Demote fake stories in the timelines of most people (show them far less frequently).

- Promote fake stories in the timelines of those who have proven themselves as citizen fact-checkers (show them more frequently), so they can identify and label even more fake stories.
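The demote-and-promote step above might look something like this in code. It is a minimal sketch under stated assumptions: the threshold, both multipliers, and the idea of a single numeric feed score are invented for illustration and do not describe how Facebook's News Feed actually ranks stories.

```python
# Placeholder values for illustration only.
FAKE_THRESHOLD = 5.0       # summed "Fake" weight at which a story counts as likely fake
DEMOTE_MULTIPLIER = 0.2    # shrink the score for ordinary viewers
PROMOTE_MULTIPLIER = 2.0   # boost the score for proven citizen fact-checkers


def adjusted_score(base_score: float, fake_score: float,
                   viewer_is_fact_checker: bool) -> float:
    """Adjust a story's feed score for one viewer.

    base_score: the score the feed would otherwise assign (hypothetical).
    fake_score: the summed weight of "Fake" reactions on the story.
    """
    if fake_score < FAKE_THRESHOLD:
        return base_score  # not enough credible flags; leave the story alone
    if viewer_is_fact_checker:
        return base_score * PROMOTE_MULTIPLIER
    return base_score * DEMOTE_MULTIPLIER
```

The same likely-fake story therefore sinks in most timelines while rising in the timelines of the people best placed to check it.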

This suggested change is completely algorithmic. It rewards diligence rather than partisan bias. It’s very Facebook. And it would work.

Josh, how would you prevent the cranks, spammers, and astroturfers from clicking the Fake button when they don’t like what was posted?

This is where the power of social comes in. If you are a crank or astroturfer, the weight of your clicking on the Fake button is NIL. To have it matter in terms of promotion and demotion of content, you have to be the kind of person who actually cites legit sources and critiques from both sides. That takes work. And as a result, those opinions will matter only for those who put in the work.

I suggested something similar about a year ago, and pointed out we have the perfect emoji for fake news — the pile of poo. 🙂

Unfortunately, Shel has identified a real weakness in an otherwise great idea. The very wealthy backers of fake news designed to influence political decisions in their interests will hire “those who (will) put in the work.” It still is worth trying. The real solution is providing free public education that teaches people how to think rationally in spite of our under-developed and mostly unrecognized emotional overrides. There are some marvelous books out there, but they are NOT accessible to the people who would benefit most from understanding how we make “bad” decisions. We need a new generation of educators/entertainers who use social media to reach and influence the people who inadvertently allow the “haves” to put the futures of all our children in jeopardy.

I did a reverse image search on a news story in FB. The image in the Red Elephant was not from the event described in the article, a protest in Malvo, Sweden in December. The image was from another city and another protest, in September. The image exaggerated the intensity of the protest. Would you mark that fake?

Yes, I would. The variety of ways things can be fake is the reason that humans are necessary in the algorithm.