Amazing! Facebook solves fake news problem! (Not really.)

The problem of fake news on Facebook is now a news story in its own right. The last few days have seen tons of articles about it, most of them misleading or wrong. Ironically, these are exactly the kind of articles that spread virally: ones with misleading headlines. The headlines I show here are real; it's the articles that don't match them (yup, clickbait). None of these “solutions” solves the problem. (For background, my earlier post describes the problem in detail.)

Google and Facebook take aim at fake news

This was the misleading headline in the Wall Street Journal. The subtitle makes it sound like Sundar Pichai and Mark Zuckerberg flipped a switch and solved the problem: “Companies address spread of misinformation after false news stories became an issue during the presidential election.”

What actually happened? Facebook and Google stopped taking ads from fake news sites. Reading this made me angry.

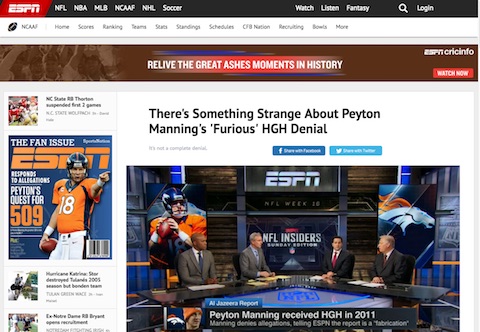

Facebook has had a pernicious fake ad problem for years. The ads lead to sites like the one shown here, which look like ESPN or another legitimate site but are actually selling something like dietary supplements. These are easy to spot. Why did Facebook wait until now to ban them?

This action does nothing to help the newsfeed, which is where fake news proliferates. Here’s the non-answer from the Facebook spokesperson, as described in the article:

Facebook’s move doesn’t address the fake news that appears in users’ news feeds, the focus of criticism of the social network. The Facebook spokesman couldn’t specify the signals its software uses to identify fake news sites, or when it will also ask people to review the sites. He also couldn’t say why Facebook couldn’t use similar technology to stamp out fake news on its news feed.

Here’s what I believe. First, they can’t accurately identify all fake news stories, or separate parody from the intent to mislead, so they can’t fix the problem. Second, it’s a political nightmare: what happens if they mark an obviously wrong Breitbart article as fake and the president-elect’s new chief strategist takes offense? Third, they don’t know how to mark fake news in the feed. They don’t want to just delete or ban it, but they haven’t yet figured out how to flag it, and they don’t change the interface without lots of testing. So they float this story about fake ads to mollify people who don’t read closely, a group that encompasses everybody. They know we’ll be sucked in because we’ve been sucked in by so much of the fake news already.

It took only 36 hours for these students to solve Facebook’s fake-news problem

That’s what it says in Business Insider. During a hackathon at Princeton, four students created a Chrome browser extension for truth.

Here’s how it works, according to Nabanita De, one of the students:

It classifies every post, be it pictures (Twitter snapshots), adult content pictures, fake links, malware links, fake news links as verified or non-verified using artificial intelligence.

For links, we take into account the website’s reputation, also query it against malware and phishing websites database and also take the content, search it on Google/Bing, retrieve searches with high confidence and summarize that link and show to the user. For pictures like Twitter snapshots, we convert the image to text, use the usernames mentioned in the tweet, to get all tweets of the user and check if current tweet was ever posted by the user.
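Here’s a rough sketch of the link check the students describe, just to make the approach concrete. It is not their code. Everything in it is hypothetical: `SITE_REPUTATION`, `MALWARE_DOMAINS`, and the thresholds stand in for real reputation and malware databases, and `corroborating_results` stands in for the count of high-confidence Google/Bing matches they mention.

```python
from dataclasses import dataclass
from urllib.parse import urlparse

# Hypothetical stand-ins for the services the students describe: a site-reputation
# table (0.0 = untrusted, 1.0 = trusted) and a malware/phishing domain database.
SITE_REPUTATION = {"nytimes.com": 0.9, "espn.com": 0.9, "espn.com-sports.info": 0.1}
MALWARE_DOMAINS = {"free-prizes.example"}


@dataclass
class Verdict:
    url: str
    verified: bool
    reason: str


def domain_of(url: str) -> str:
    """Return the link's hostname without a leading 'www.'."""
    host = urlparse(url).hostname or ""
    return host.removeprefix("www.")


def classify_link(url: str, corroborating_results: int,
                  min_reputation: float = 0.5, min_corroboration: int = 2) -> Verdict:
    """Mark a link verified only if it is not malware, comes from a reputable
    domain, and its story turns up in enough search results.
    `corroborating_results` is a hypothetical count of high-confidence search hits."""
    domain = domain_of(url)
    if domain in MALWARE_DOMAINS:
        return Verdict(url, False, "listed as malware/phishing")
    reputation = SITE_REPUTATION.get(domain, 0.0)  # unknown sites get no credit
    if reputation < min_reputation:
        return Verdict(url, False, f"low reputation ({reputation})")
    if corroborating_results < min_corroboration:
        return Verdict(url, False, "not corroborated by search")
    return Verdict(url, True, "reputable and corroborated")


def tweet_screenshot_is_authentic(ocr_text: str, user_tweets: list[str]) -> bool:
    """A screenshot checks out only if its OCR'd text matches a tweet the user
    actually posted (ignoring case and extra whitespace)."""
    def normalize(s: str) -> str:
        return " ".join(s.split()).lower()
    target = normalize(ocr_text)
    return any(normalize(t) == target for t in user_tweets)
```

Even this toy version shows the limits: a brand-new fake site has no reputation and gets flagged, which is good, but a satirical or years-old story on a reputable domain comes back “verified.”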

That’s great, and I salute them. However, a technique like that is extremely broad-brush. It may help find sites that are questionable, but new questionable sites pop up all the time. How will it treat satire? How will it treat political bias? And it does nothing about links to an article that’s obsolete because it’s seven years old.

Good first step. But I don’t think any technique that simply reviews sites, without human input, can solve this problem satisfactorily.

Want to keep fake news out of your newsfeed? College professor creates list of sites to avoid.

Thanks, L.A. Times, for showing us what sites to avoid. And thanks to Melissa Zimdars of Merrimack College for creating the list, currently at about 150 sites.

It’s a nice idea, but these sites proliferate so quickly that no human can keep a list up to date. There are way more than 150 of them. There’s also no provision for what to do if Prof. Zimdars includes a site that somebody else thinks isn’t fake. You can already see signs in her Google Doc that she’s backtracking. Her life is about to become a living hell as people protest her choices and suggest more.

You can’t fix this problem at the site level. What happens if you post fake news on Medium? Or LinkedIn? Or as a Forbes contributor?
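To see why, here’s a minimal sketch of how any site list gets applied, assuming a hypothetical `BLOCKED_DOMAINS` set matched against the link’s hostname. This is my illustration, not how Prof. Zimdars’s list is actually used by anyone.

```python
from urllib.parse import urlparse

# Hypothetical blocklist of fake-news domains, in the spirit of a hand-maintained list.
BLOCKED_DOMAINS = {"fake-news-site.example"}


def is_blocked(url: str) -> bool:
    """Block a link only if its hostname (minus 'www.') is on the list."""
    host = (urlparse(url).hostname or "").removeprefix("www.")
    return host in BLOCKED_DOMAINS


print(is_blocked("http://www.fake-news-site.example/story"))   # True
print(is_blocked("https://medium.com/@anyone/made-up-story"))  # False: the host is Medium
print(is_blocked("http://fake-news-site2.example/story"))      # False: a copycat domain
```

The list can only judge the host, so a made-up story on a platform anyone can post to sails through, and so does a copycat site registered yesterday.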

A list of sites, no matter how good or well meaning, is not a solution to this problem.

Renegade Facebook Employees Form Task Force To Battle Fake News

According to BuzzFeed, dozens of employees are involved, although none would comment on the record. This was telling:

Or, as former Facebook designer Bobby Goodlatte wrote on his own Facebook wall on November 8, “Sadly, News Feed optimizes for engagement. As we’ve learned in this election, bullshit is highly engaging. A bias towards truth isn’t an impossible goal. Wikipedia, for instance, still bends towards the truth despite a massive audience. But it’s now clear that democracy suffers if our news environment incentivizes bullshit.”

Ironically, BuzzFeed gave this article a clickbait headline that overstates what’s actually happening. According to the article, there’s a loose collection of dissatisfied employees, not a “task force.” There’s no indication of official activity or engineering progress to solve the problem. Interesting, but pretty far from solving the problem.

Here’s How Facebook Actually Won Trump the Presidency

Wired says that the Trump-Pence campaign focused its ads on Facebook and learned from what resonated.

Fascinating. But that has nothing to do with fake news, even if the headline might make you think so.

Bullshit Detector plugin tells you when you’re reading fake news

This is new, from BGR. Looks promising, but for all the reasons I just shared, I don’t think any plugin can just read an article and mark it “true” or “false.” There’s no indication of how it works.

A hard problem will need a complex solution

People’s willingness to believe in simple solutions to complex problems is the same reason they believe the fake news they read. It’s also why they think fake news is a simple problem to solve. It isn’t.

There is an opponent here. Fake news generates advertising revenues. It is also now an election tactic. Those who create it are wily and will elude simple technology fixes.

The media is turning on Facebook now. In The New York Times, Zeynep Tufekci writes:

Only Facebook has the data that can exactly reveal how fake news, hoaxes and misinformation spread, how much there is of it, who creates and who reads it, and how much influence it may have. Unfortunately, Facebook exercises complete control over access to this data by independent researchers. It’s as if tobacco companies controlled access to all medical and hospital records.

We need a solution, but it’s not going to be quick. Here’s how you’ll know when this problem is actually solved.

- The solution will include gradations of truth (satire, partly true, misleading, malicious, biased), not just “this is fake.”

- The solution will include a human element, similar to the way Wikipedia works. A.I. may identify shaky sites, but humans will need to back up and refine the algorithm and its choices.

- It won’t just be a browser plugin; it will change the way site links look in Facebook.

Until you see all those elements in a proposed “solution,” the problem remains.
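To make those criteria concrete, here’s one possible shape for a story rating, sketched in Python. The rating names come from the list above; the strict-majority vote, the three-reviewer threshold, and the label text are my own assumptions, not anything Facebook has proposed.

```python
from collections import Counter
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Rating(Enum):
    # Gradations of truth, not just "fake" vs. "real".
    ACCURATE = "accurate"
    SATIRE = "satire"
    PARTLY_TRUE = "partly true"
    MISLEADING = "misleading"
    BIASED = "biased"
    MALICIOUS = "malicious"


@dataclass
class StoryAssessment:
    url: str
    machine_guess: Optional[Rating] = None                   # an A.I. classifier's suggestion
    human_votes: list[Rating] = field(default_factory=list)  # reviewer judgments, Wikipedia-style

    def final_rating(self, min_votes: int = 3) -> tuple[Optional[Rating], bool]:
        """Return (rating, human_reviewed). Humans override the machine once
        enough reviewers have voted and a strict majority agrees."""
        if len(self.human_votes) >= min_votes:
            rating, count = Counter(self.human_votes).most_common(1)[0]
            if count > len(self.human_votes) / 2:
                return rating, True
        return self.machine_guess, False

    def feed_label(self, min_votes: int = 3) -> str:
        """The badge shown on the link in the feed (empty means no badge)."""
        rating, reviewed = self.final_rating(min_votes)
        if rating is None or rating is Rating.ACCURATE:
            return ""
        suffix = "" if reviewed else " (pending human review)"
        return f"Rated: {rating.value}{suffix}"


story = StoryAssessment("http://example.com/story", machine_guess=Rating.MISLEADING)
print(story.feed_label())  # Rated: misleading (pending human review)
story.human_votes += [Rating.SATIRE, Rating.SATIRE, Rating.MISLEADING]
print(story.feed_label())  # Rated: satire
```

The point of the design is that the machine only proposes; the label people see in the feed changes once humans weigh in.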

(Thanks to Facebook friends including Ted Schadler, who sent me links to a number of these articles.)

Perhaps we’re looking at this in reverse – or, in science terms, Type I vs. Type II errors. I don’t need a list of fake sites; I need a list of sites I can believe. It would be far easier to set up a system whereby a source or site earns a trust level and can display that designation on news stories. If it doesn’t have the designation, I ignore it. Yes, people will still believe and redistribute nonsense and worse. But let’s face it, you can’t engineer out stupidity and laziness. If a source is new and unrated, its challenge will be to convince a rated source to carry its story (there could be gradations like conditional or preliminary ratings).

When I last looked, such sites were called “newspapers of record”.

C’mon, people. Facebook can solve this problem in a heartbeat. It chose not to because it benefits financially from ad revenues. The Silicon Valley glitterati, along with the mainstream media, didn’t believe Trump would win and definitely didn’t believe that people would be fooled by fake news and image-based memes.

Fake news from Facebook and fake polls – ok, misleading polls – from the mainstream media.

At one end is Facebook, the gatekeeper of news for more than 40% of people, with almost zero government oversight through regulation in the public interest. Facebook has a global ‘population’ of 1.8 billion. By comparison, the New York Times’s print circulation is about 600,000 per day and its digital subscriptions number about 1 million.

The fault lies first with the media, for giving up their distribution to Google initially and to Facebook now, and second with oversight bodies, for not regulating Google, Facebook and Twitter. By contrast, TV is heavily regulated, and you would not see these fake news stories or ads appearing there. Yet Facebook easily has more viewers than the mainstream TV channels.